8 Modeling US Census data

The previous chapter included a range of examples illustrating methods for analyzing and exploring spatial datasets. Census data can also be used to derive models for explaining patterns that occur across regions or within cities. These models draw from concepts introduced in prior chapters, but can also be used as part of explanatory frameworks or within broader analytic pipelines for statistical inference or machine learning. This chapter introduces a series of such frameworks. The first section looks at segregation and diversity indices which are widely used across the social sciences to explain demographic patterns. The second section explores topics in statistical modeling, including methods for spatial regression that take into account the spatial autocorrelation inherent in most Census variables. The third and final section explores concepts such as classification, clustering, and regionalization which are common in both unsupervised and supervised machine learning. Examples will illustrate how to use Census data to generate neighborhood typologies, which are widely used for business and marketing applications, and how to generate spatially coherent sales territories from Census data with regionalization.

8.1 Indices of segregation and diversity

A large body of research in the social sciences is concerned with neighborhood segregation and diversity. Segregation as addressed here generally refers to the measurement of the extent to which two or more groups live apart from each other; diversity as a companion metric measures neighborhood heterogeneity among groups. A wide range of indices have been developed by social scientists to measure segregation and diversity, and in many cases are inherently linked with spatial Census data which are often the best way to measure these concepts. Segregation and diversity indices are implemented in a variety of different R packages; the package recommended by this book is the segregation package (Elbers 2021), which includes R functions for a variety of regional and local indices.

8.1.1 Data setup with spatial analysis

Much of the segregation and diversity literature focuses on race and ethnicity, which will be explored in the example below. The data setup code uses spatial methods covered in the previous three chapters to acquire Census tract-level data on population estimates for non-Hispanic white, non-Hispanic black, non-Hispanic Asian, and Hispanic populations in California, then filters those Census tracts those that intersect the largest urbanized areas by population in the state using an inner spatial join. In turn, it is an illustrative example of how spatial analysis tools can be important parts of data setup workflows for analysis. As urbanized areas for the 2020 Census are not yet defined at the time of this writing, we’ll be using urbanized areas for 2019 and data from the 2015-2019 ACS.

library(tidycensus)

library(tidyverse)

library(segregation)

library(tigris)

library(sf)

# Get California tract data by race/ethnicity

ca_acs_data <- get_acs(

geography = "tract",

variables = c(

white = "B03002_003",

black = "B03002_004",

asian = "B03002_006",

hispanic = "B03002_012"

),

state = "CA",

geometry = TRUE,

year = 2019

)

# Use tidycensus to get urbanized areas by population with geometry,

# then filter for those that have populations of 750,000 or more

us_urban_areas <- get_acs(

geography = "urban area",

variables = "B01001_001",

geometry = TRUE,

year = 2019,

survey = "acs1"

) %>%

filter(estimate >= 750000) %>%

transmute(urban_name = str_remove(NAME,

fixed(", CA Urbanized Area (2010)")))

# Compute an inner spatial join between the California tracts and the

# urbanized areas, returning tracts in the largest California urban

# areas with the urban_name column appended

ca_urban_data <- ca_acs_data %>%

st_join(us_urban_areas, left = FALSE) %>%

select(-NAME) %>%

st_drop_geometry()To summarize, the spatial analysis workflow detailed above uses the following steps:

- Data on race & ethnicity from the 2015-2019 5-year ACS for the four largest demographic groups in California is acquired with tidycensus’s

get_acs()at the Census tract level with feature geometry included. Depending on the goals of the study, other racial/ethnic groups (e.g. native American, native Hawaiian/Pacific Islander) should be added or removed as needed. - As urban areas as defined by the Census Bureau often cross state boundaries, urban areas must be obtained for the entire US with

get_acs(). Once obtained, urban areas are filtered to only those areas with populations of 750,000 or greater, and thentransmute()is used to retain only a new column representing the area name (along with the simple feature geometry column). - A spatial join between the Census tract data and the urban area data is computed with

st_join(). The argumentleft = FALSEcomputes an inner spatial join, which retains only those Census tracts that intersect the urban area boundaries, and appends the correspondingurban_namecolumn to each Census tract.

The data structure appears as follows:

| GEOID | variable | estimate | moe | urban_name |

|---|---|---|---|---|

| 06013370000 | white | 1235 | 166 | San Francisco–Oakland |

| 06013370000 | black | 371 | 149 | San Francisco–Oakland |

| 06013370000 | asian | 540 | 88 | San Francisco–Oakland |

| 06013370000 | hispanic | 557 | 121 | San Francisco–Oakland |

| 06001442301 | white | 969 | 197 | San Francisco–Oakland |

| 06001442301 | black | 214 | 129 | San Francisco–Oakland |

| 06001442301 | asian | 3000 | 259 | San Francisco–Oakland |

| 06001442301 | hispanic | 1010 | 272 | San Francisco–Oakland |

The data are in long (tidy) form, the default used by tidycensus; this data structure is ideal for computing indices in the segregation package.

8.1.2 The dissimilarity index

The dissimilarity index is widely used to assess neighborhood segregation between two groups within a region. It is computed as follows:

\[ D = \frac{1}{2}\sum\limits_{i=1}^{N}\left |\frac{a_i}{A}-\frac{b_i}{B} \right | \]

where \(a_i\) represents the population of group \(A\) in a given areal unit \(i\); \(A\) is the total population of that group in the study region (e.g. a metropolitan area); and \(b_i\) and \(B\) are the equivalent metrics for the second group. The index ranges from a low of 0 to a high of 1, where 0 represents perfect integration between the two groups and 1 represents complete segregation. This index is implemented in the segregation package with the dissimilarity() function.

The example below computes the dissimilarity index between non-Hispanic white and Hispanic populations for the San Francisco/Oakland urbanized area. The data are filtered for only those rows that represent the target populations in the San Francisco/Oakland area, which is then piped to the dissimilarity() function. The function requires identification of a group column, for which we’ll use variable; a unit column representing the neighborhood unit, for which we’ll use GEOID to represent the Census tract; and a weight column that tells the function how many people are in each group.

ca_urban_data %>%

filter(variable %in% c("white", "hispanic"),

urban_name == "San Francisco--Oakland") %>%

dissimilarity(

group = "variable",

unit = "GEOID",

weight = "estimate"

)## stat est

## 1: D 0.5135526The \(D\) index of segregation between non-Hispanic white and Hispanic populations in the San Francisco-Oakland area is 0.51. This statistic, however, is more meaningful in comparison with other cities. To compute dissimilarity for each urban area, we can creatively apply tidyverse techniques covered in earlier chapters and introduce a new function, group_modify(), for group-wise calculation. This example follows the recommended workflow in the segregation package documentation. The code below filters the data for non-Hispanic white and Hispanic populations by Census tract, then groups the dataset by values in the urban_name column. The group_modify() function from dplyr then allows for the calculation of dissimilarity indices by group, which in this example is Census tracts within each respective urban area. It returns a combined dataset that is sorted in descending order with arrange() to make comparisons.

ca_urban_data %>%

filter(variable %in% c("white", "hispanic")) %>%

group_by(urban_name) %>%

group_modify(~

dissimilarity(.x,

group = "variable",

unit = "GEOID",

weight = "estimate"

)

) %>%

arrange(desc(est))| urban_name | stat | est |

|---|---|---|

| Los Angeles–Long Beach–Anaheim | D | 0.5999229 |

| San Francisco–Oakland | D | 0.5135526 |

| San Jose | D | 0.4935633 |

| San Diego | D | 0.4898184 |

| Riverside–San Bernardino | D | 0.4079863 |

| Sacramento | D | 0.3687927 |

The Los Angeles area is the most segregated of the large urbanized areas in California with respect to non-Hispanic white and Hispanic populations at the Census tract level, followed by San Francisco/Oakland. Riverside/San Bernardino and Sacramento are the least segregated of the large urban areas in the state.

8.1.3 Multi-group segregation indices

One disadvantage of the dissimilarity index is that it only measures segregation between two groups. For a state as diverse as California, we may be interested in measuring segregation and diversity between multiple groups at a time. The segregation package implements two such indices: the Mutual Information Index \(M\), and Theil’s Entropy Index \(H\) (Mora and Ruiz-Castillo 2011). Following Elbers (2021), \(M\) is computed as follows for a dataset \(T\):

\[ M(\mathbf{T})=\sum_{u=1}^U\sum_{g=1}^Gp_{ug}\log\frac{p_{ug}}{p_{u}p_{g}} \]

where \(U\) is the total number of units \(u\), \(G\) is the total number of groups \(g\), and \(p_{ug}\) is the joint probability of being in unit \(u\) and group \(g\), with \(p_u\) and \(p_g\) referring to unit and group probabilities. Theil’s \(H\) for the same dataset \(T\) can then be written as:

\[ H(\mathbf{T})=\frac{M(\mathbf{T})}{E(\mathbf{T})} \]

where \(E(T)\) is the entropy of \(T\), normalizing \(H\) to range between values of 0 and 1.

Computing these indices is straightforward with the segregation package. The mutual_total() function computes both indices; when different regions are to be considered (like multiple urban areas, as in this example) the mutual_within() function will compute \(M\) and \(H\) by urban area with the within argument appropriately specified. We’ll be using the full ca_urban_data dataset, which includes population estimates for non-Hispanic white, non-Hispanic Black, non-Hispanic Asian, and Hispanic populations.

mutual_within(

data = ca_urban_data,

group = "variable",

unit = "GEOID",

weight = "estimate",

within = "urban_name",

wide = TRUE

)| urban_name | M | p | H | ent_ratio |

|---|---|---|---|---|

| Los Angeles–Long Beach–Anaheim | 0.3391033 | 0.5016371 | 0.2851662 | 0.9693226 |

| Riverside–San Bernardino | 0.1497129 | 0.0867808 | 0.1408461 | 0.8664604 |

| Sacramento | 0.1658898 | 0.0736948 | 0.1426804 | 0.9477412 |

| San Diego | 0.2290891 | 0.1256072 | 0.2025728 | 0.9218445 |

| San Francisco–Oakland | 0.2685992 | 0.1394522 | 0.2116127 | 1.0346590 |

| San Jose | 0.2147445 | 0.0728278 | 0.1829190 | 0.9569681 |

When multi-group segregation is considered using these indices, Los Angeles remains the most segregated urban area, whereas Riverside/San Bernardino is the least segregated.

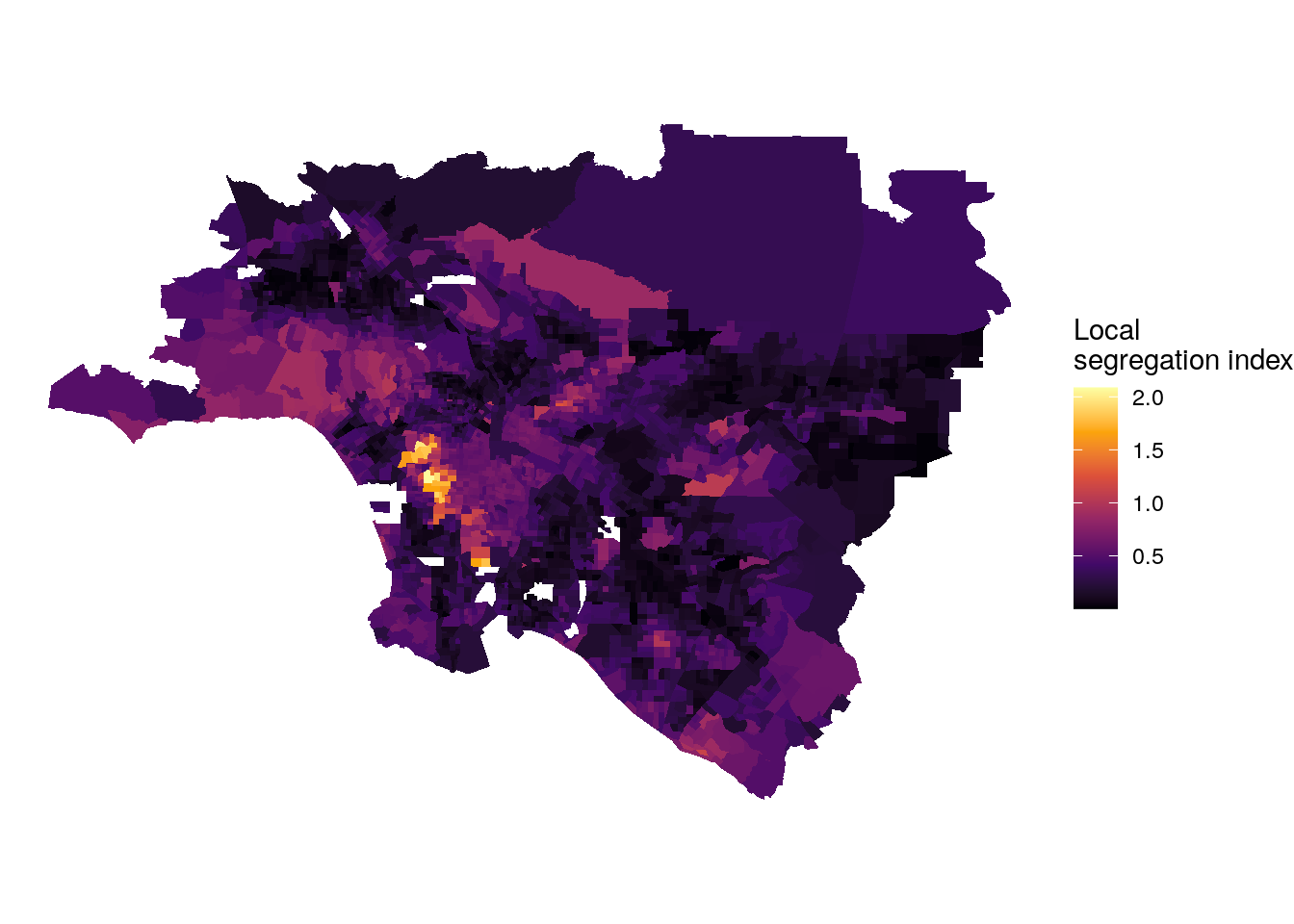

The segregation package also offers a function for local segregation analysis, mutual_local(), which decomposes \(M\) into unit-level segregation scores, represented by ls. In the example below, we will use mutual_local() to examine patterns of segregation across the most segregated urban area, Los Angeles.

la_local_seg <- ca_urban_data %>%

filter(urban_name == "Los Angeles--Long Beach--Anaheim") %>%

mutual_local(

group = "variable",

unit = "GEOID",

weight = "estimate",

wide = TRUE

)| GEOID | ls | p |

|---|---|---|

| 06037101110 | 0.2821846 | 0.0003363 |

| 06037101122 | 0.7790480 | 0.0002690 |

| 06037101210 | 0.1012193 | 0.0005088 |

| 06037101220 | 0.1182334 | 0.0002917 |

| 06037101300 | 0.6538220 | 0.0003094 |

| 06037101400 | 0.3951408 | 0.0002655 |

| 06037102103 | 0.3039904 | 0.0001384 |

| 06037102104 | 0.4462187 | 0.0002836 |

| 06037102105 | 0.1284913 | 0.0001496 |

| 06037102107 | 0.2389721 | 0.0003454 |

The results can be mapped by joining the data to a dataset of Census tracts from tigris; the inner_join() function is used to retain tracts for the Los Angeles area only.

la_tracts_seg <- tracts("CA", cb = TRUE, year = 2019) %>%

inner_join(la_local_seg, by = "GEOID")

la_tracts_seg %>%

ggplot(aes(fill = ls)) +

geom_sf(color = NA) +

coord_sf(crs = 26946) +

scale_fill_viridis_c(option = "inferno") +

theme_void() +

labs(fill = "Local\nsegregation index")

Figure 8.1: Map of local multi-group segregation scores in Los Angeles

8.1.4 Visualizing the diversity gradient

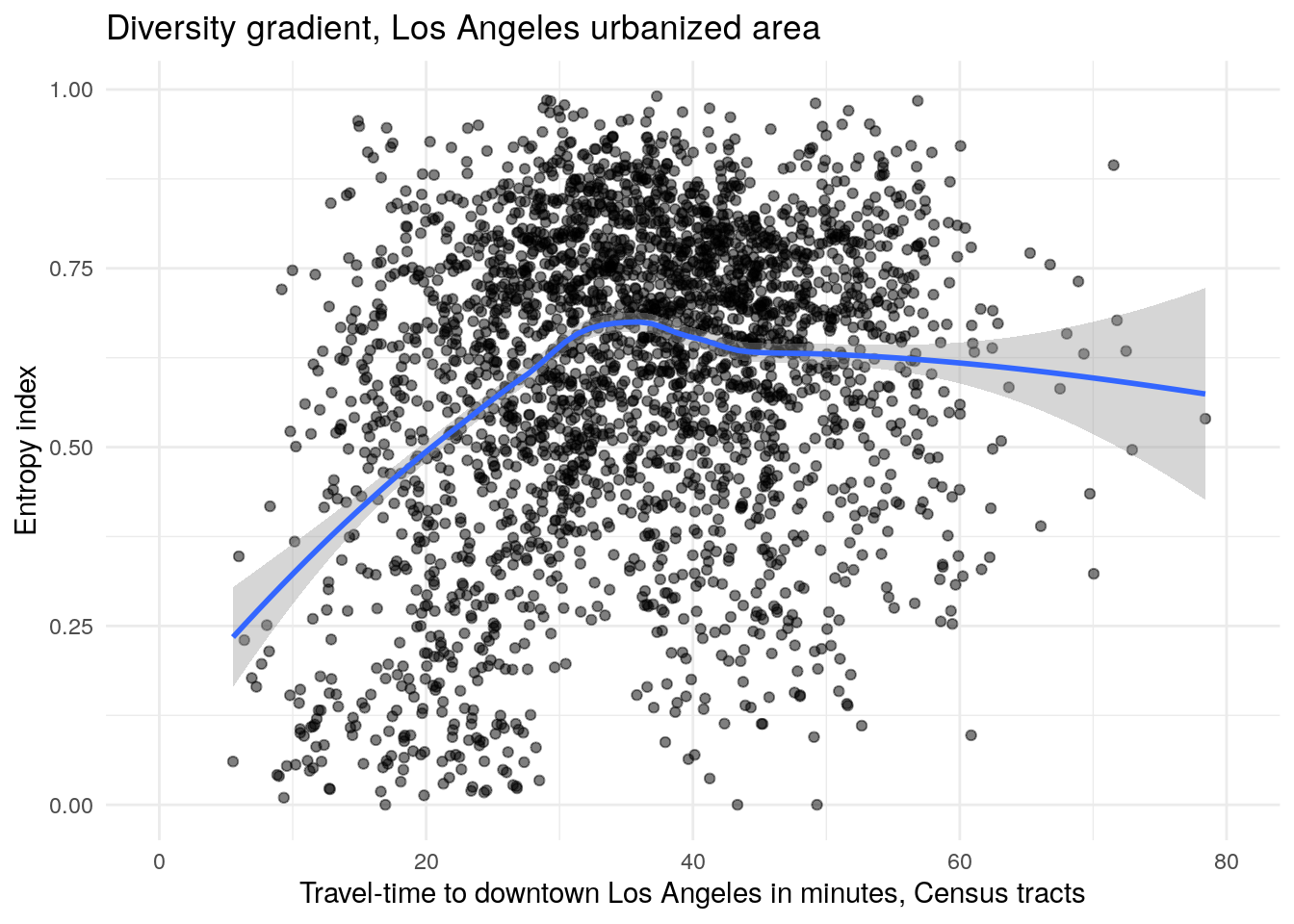

The diversity gradient is a concept that uses scatterplot smoothing to visualize how neighborhood diversity varies by distance or travel-time from the core of an urban region (K. Walker 2016b). Historically, literature on suburbanization in the social sciences assumes a more heterogeneous urban core relative to segregated and homogeneous suburban neighborhoods. The diversity gradient is a visual heuristic used to evaluate the validity of this demographic model.

The entropy index for a given geographic unit is calculated as follows:

\[ E = \sum\limits_{r=1}^{n}Q_rln\frac{1}{Q_r} \]

\(Q_r\) in this calculation represents group \(r\)’s proportion of the population in the geographic unit.

This statistic is implemented in the entropy() function in the segregation package. As the entropy() function calculates this statistic for a specific unit at a time, we will group the data by tract, and then use group_modify() to calculate the entropy for each tract separately. The argument base = 4 is set by convention to the number of groups in the calculation; this sets the maximum value of the statistic to 1, which represents perfect evenness between the four groups in the area. Once computed, the indices are joined to a dataset of Census tracts from California; inner_join() is used to retain only those tracts in the Los Angeles urbanized area.

la_entropy <- ca_urban_data %>%

filter(urban_name == "Los Angeles--Long Beach--Anaheim") %>%

group_by(GEOID) %>%

group_modify(~data.frame(entropy = entropy(

data = .x,

group = "variable",

weight = "estimate",

base = 4)))

la_entropy_geo <- tracts("CA", cb = TRUE, year = 2019) %>%

inner_join(la_entropy, by = "GEOID")Visualization of the diversity gradient then requires a relative measurement of how far each Census tract is from the urban core. The travel-time methods available in the mapboxapi package introduced in Chapter 7 are again used here to calculate driving distance to Los Angeles City Hall for all Census tracts in the Los Angeles urbanized area.

library(mapboxapi)

la_city_hall <- mb_geocode("City Hall, Los Angeles CA")

minutes_to_downtown <- mb_matrix(la_entropy_geo, la_city_hall)Once computed, the travel times are stored in a vector minutes_to_downtown, then assigned to a new column minutes in the entropy data frame. The tract diversity index is visualized using ggplot2 relative to its travel time to downtown Los Angeles, with a LOESS smoother superimposed over the scatterplot to represent the diversity gradient.

la_entropy_geo$minutes <- as.numeric(minutes_to_downtown)

ggplot(la_entropy_geo, aes(x = minutes_to_downtown, y = entropy)) +

geom_point(alpha = 0.5) +

geom_smooth(method = "loess") +

theme_minimal() +

scale_x_continuous(limits = c(0, 80)) +

labs(title = "Diversity gradient, Los Angeles urbanized area",

x = "Travel-time to downtown Los Angeles in minutes, Census tracts",

y = "Entropy index")

Figure 8.2: Diversity gradient visualization for the Los Angeles, CA urbanized area

The visualization of the diversity gradient shows that neighborhood diversity increases with driving time from the urban core in Los Angeles, peaking at about 35 minutes in free-flowing traffic from the urban core then leveling off after that. The structure of the diversity gradient suggests that Census tracts near to downtown tend to be segregated, and suburban tracts more likely to be integrated.

8.2 Regression modeling with US Census data

Regression modeling is widely used in industry and the social sciences to understand social processes. In the social sciences, the goal of regression modeling is commonly to understand the relationships between a variable under study, termed an outcome variable, and one or more predictors that are believed to have some influence on the outcome variable. Following James et al. (2013), a model can be represented with the following general notation:

\[ Y = f(X) + \epsilon \]

where \(Y\) represents the outcome variable; \(X\) represents one or more predictors hypothesized to have some influence on the outcome variable; \(f\) is a function that represents the relationships between \(X\) and \(Y\); and \(\epsilon\) represents the error terms or residuals, the differences between the modeled values of \(Y\) and the actual values. The function \(f\) will be estimated using a method appropriate for the structure of the data and selected by the analyst.

A complete treatment of regression modeling is beyond the scope of this book; recommended resources include James et al. (2013), Boehmke and Greenwell (2019), Çetinkaya-Rundel and Hardin (2021), and Matloff (2017). The purpose of this section is to illustrate an example workflow using regression modeling to analyze data from the American Community Survey. The section will start with a simple linear model and extend its discussion from there. In doing so, some problems with the application of the linear model to aggregated Census data will be discussed. First, demographic statistics are often highly correlated with one another, meaning that Census data-based models risk collinearity where predictors are not independent of one another. Second, spatial demographic data commonly exhibit spatial autocorrelation, which may lead to a violation of the assumption of independent and identically distributed error terms (\(i.i.d\)) in the linear model. Suggested approaches for addressing these problems discussed in this section include dimension reduction and spatial regression.

8.2.1 Data setup and exploratory data analysis

The topic of study in this illustrative applied workflow will be median home value by Census tract in the Dallas-Fort Worth metropolitan area. To get started, we’ll define several counties in north Texas that we’ll use to represent the DFW region, and use a named vector of variables to acquire data that will represent both our outcome variable and predictors. Data are returned from tidycensus with the argument output = "wide", giving one column per variable. The geometry is also transformed to an appropriate coordinate reference system for North Texas, EPSG code 32138 (NAD83 / Texas North Central with meters for measurement units).

library(tidycensus)

library(sf)

dfw_counties <- c("Collin County", "Dallas", "Denton",

"Ellis", "Hunt", "Kaufman", "Rockwall",

"Johnson", "Parker", "Tarrant", "Wise")

variables_to_get <- c(

median_value = "B25077_001",

median_rooms = "B25018_001",

median_income = "DP03_0062",

total_population = "B01003_001",

median_age = "B01002_001",

pct_college = "DP02_0068P",

pct_foreign_born = "DP02_0094P",

pct_white = "DP05_0077P",

median_year_built = "B25037_001",

percent_ooh = "DP04_0046P"

)

dfw_data <- get_acs(

geography = "tract",

variables = variables_to_get,

state = "TX",

county = dfw_counties,

geometry = TRUE,

output = "wide",

year = 2020

) %>%

select(-NAME) %>%

st_transform(32138) # NAD83 / Texas North Central| GEOID | median_valueE | median_valueM | median_roomsE | median_roomsM | total_populationE | total_populationM | median_ageE | median_ageM | median_year_builtE | median_year_builtM | median_incomeE | median_incomeM | pct_collegeE | pct_collegeM | pct_foreign_bornE | pct_foreign_bornM | pct_whiteE | pct_whiteM | percent_oohE | percent_oohM | geometry |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 48085030101 | 183600 | 11112 | 6.0 | 0.3 | 2296 | 420 | 31.9 | 5.6 | 1994 | 6 | 63036 | 25030 | 8.6 | 4.3 | 16.5 | 9.7 | 65.4 | 12.6 | 85.5 | 6.0 | MULTIPOLYGON (((787828.3 21… |

| 48085030102 | 198500 | 88036 | 6.0 | 0.7 | 2720 | 524 | 45.5 | 4.4 | 1995 | 4 | 65234 | 19074 | 19.2 | 6.5 | 20.0 | 9.0 | 68.8 | 9.8 | 78.6 | 9.7 | MULTIPOLYGON (((784027.9 21… |

| 48085030201 | 324200 | 47203 | 7.4 | 0.6 | 3653 | 509 | 35.1 | 5.6 | 2002 | 3 | 85938 | 12350 | 41.3 | 6.6 | 4.9 | 2.7 | 77.0 | 7.8 | 79.2 | 7.1 | MULTIPOLYGON (((774843.6 21… |

| 48085030202 | 366900 | 20014 | 7.3 | 0.4 | 3530 | 418 | 42.1 | 3.3 | 2001 | 4 | 134097 | 24830 | 40.9 | 5.6 | 8.3 | 4.6 | 72.5 | 8.5 | 88.3 | 4.7 | MULTIPOLYGON (((764886.2 21… |

| 48085030204 | 217200 | 14152 | 6.2 | 0.8 | 6592 | 1193 | 32.7 | 4.4 | 2006 | 1 | 98622 | 6630 | 51.8 | 15.8 | 9.7 | 5.3 | 63.0 | 10.0 | 63.6 | 18.2 | MULTIPOLYGON (((778099.2 21… |

| 48085030205 | 231200 | 41519 | 6.3 | 0.8 | 5257 | 1273 | 30.4 | 1.6 | 2007 | 3 | 75382 | 41296 | 31.7 | 12.2 | 28.3 | 15.0 | 45.9 | 17.5 | 82.2 | 11.7 | MULTIPOLYGON (((777818.4 21… |

The ACS estimates we’ve acquired include:

median_valueE: The median home value of the Census tract (our outcome variable);median_roomsE: The median number of rooms for homes in the Census tract;total_populationE: The total population;median_ageE: The median age of the population in the Census tract;median_year_builtE: The median year built of housing structures in the tract;median_incomeE: The median income of households in the Census tract;pct_collegeE: The percentage of the population age 25 and up with a four-year college degree;pct_foreign_bornE: The percentage of the population born outside the United States;pct_whiteE: The percentage of the population that identifies as non-Hispanic white;percent_oohE: The percentage of housing units in the tract that are owner-occupied.

8.2.2 Inspecting the outcome variable with visualization

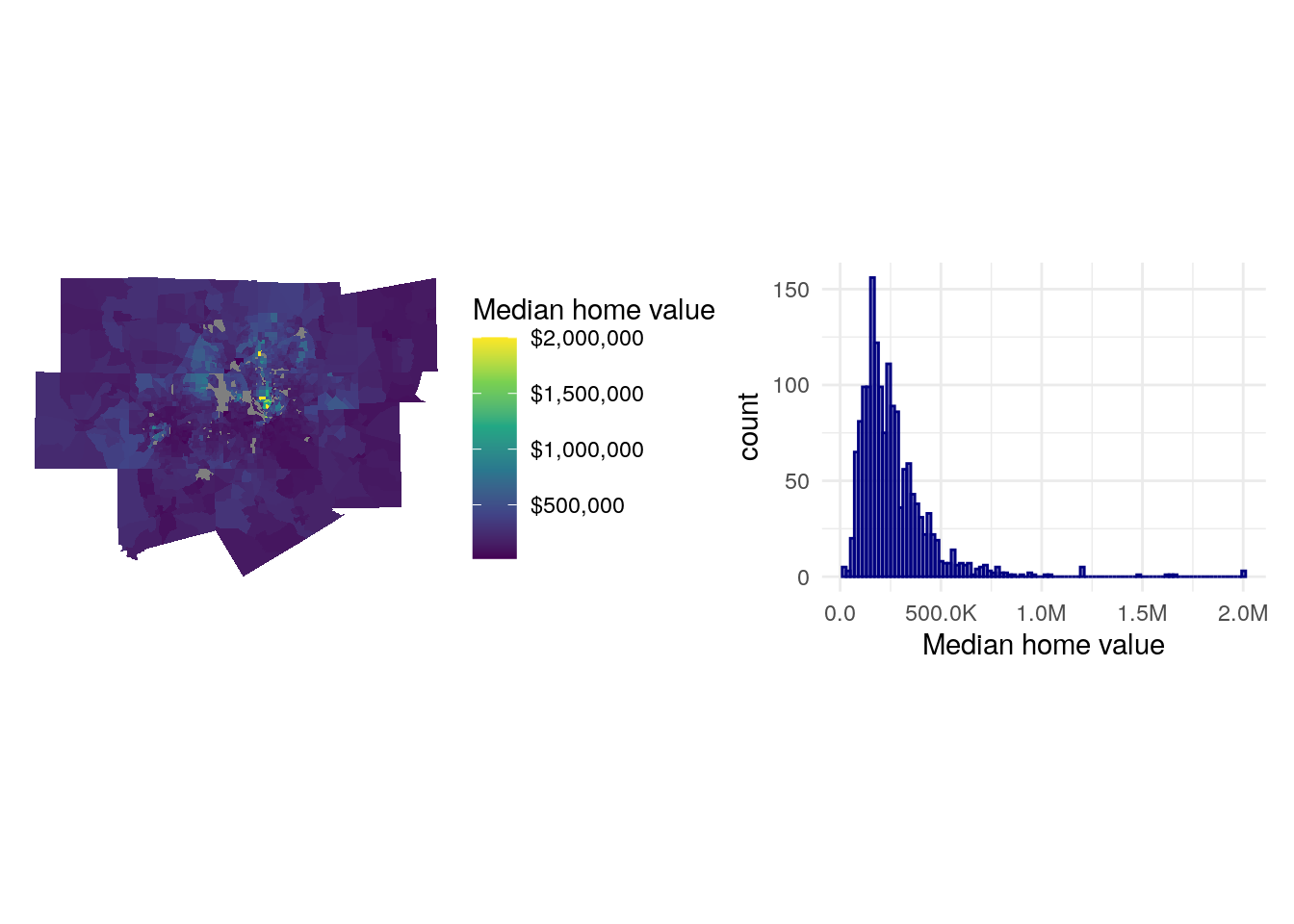

To get started, we will examine both the geographic and data distributions of our outcome variable, median home value, with a quick map with geom_sf() and a histogram.

library(tidyverse)

library(patchwork)

mhv_map <- ggplot(dfw_data, aes(fill = median_valueE)) +

geom_sf(color = NA) +

scale_fill_viridis_c(labels = scales::label_dollar()) +

theme_void() +

labs(fill = "Median home value ")

mhv_histogram <- ggplot(dfw_data, aes(x = median_valueE)) +

geom_histogram(alpha = 0.5, fill = "navy", color = "navy",

bins = 100) +

theme_minimal() +

scale_x_continuous(labels = scales::label_number_si(accuracy = 0.1)) +

labs(x = "Median home value")

mhv_map + mhv_histogram

Figure 8.3: Median home value charts

As is common with home values in metropolitan regions, the data distribution is right-skewed with a clustering of Census tracts on the lower end of the distribution of values and a long tail of very expensive areas, generally located north of downtown Dallas. This can lead to downstream violations of normality in model residuals. In turn, we might consider log-transforming our outcome variable, which will make its distribution closer to normal and will better capture the geographic variations in home values that we are trying to model.

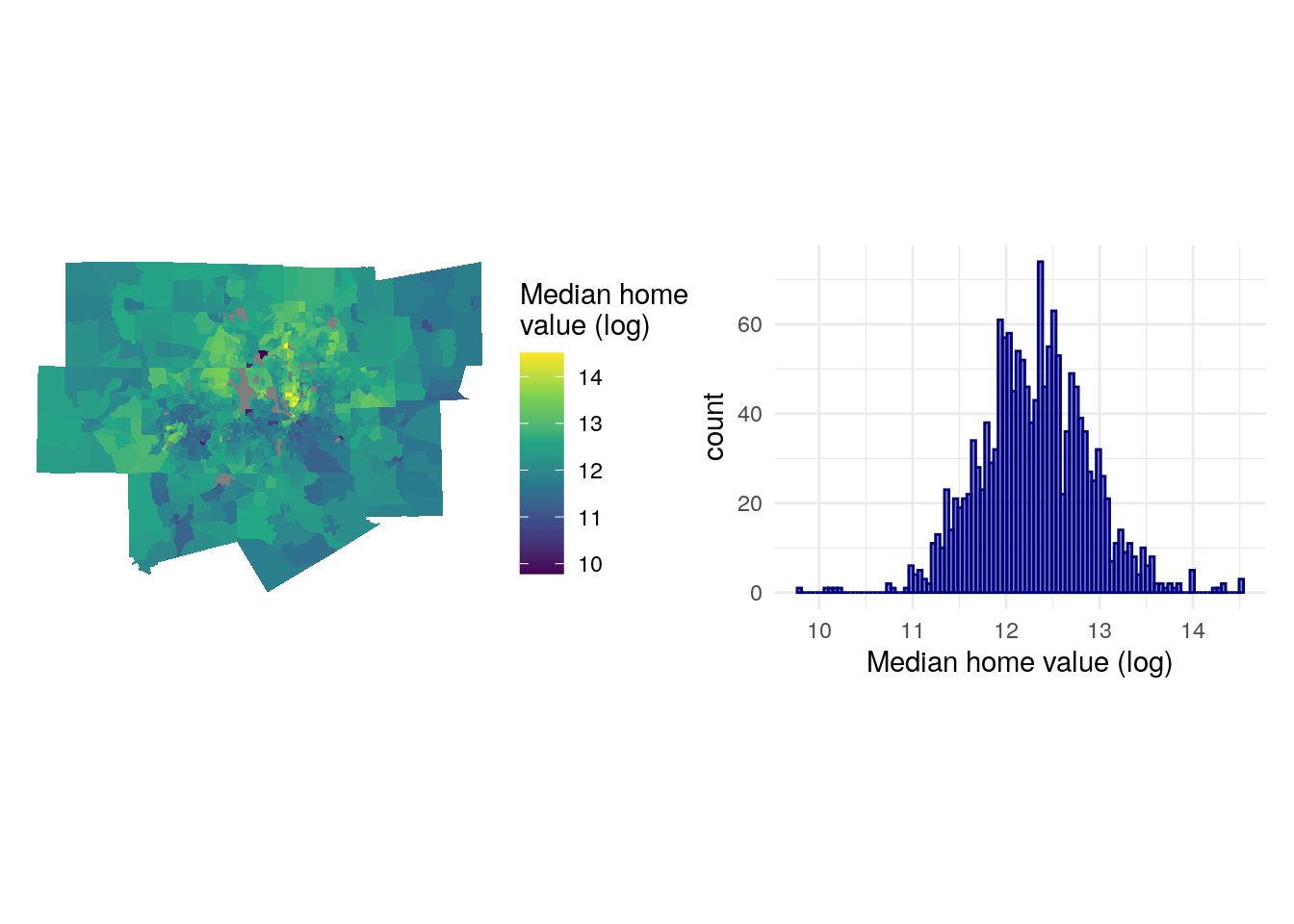

library(tidyverse)

library(patchwork)

mhv_map_log <- ggplot(dfw_data, aes(fill = log(median_valueE))) +

geom_sf(color = NA) +

scale_fill_viridis_c() +

theme_void() +

labs(fill = "Median home\nvalue (log)")

mhv_histogram_log <- ggplot(dfw_data, aes(x = log(median_valueE))) +

geom_histogram(alpha = 0.5, fill = "navy", color = "navy",

bins = 100) +

theme_minimal() +

scale_x_continuous() +

labs(x = "Median home value (log)")

mhv_map_log + mhv_histogram_log

Figure 8.4: Logged median home value charts

The expensive areas of north Dallas still stand out, but the log-transformation makes the distribution of values more normal and better shows geographic variation of home values on the map. This suggests that we require some data preparation prior to fitting the model.

8.2.3 “Feature engineering”

A common term used when preparing data for regression modeling is “feature engineering,” which refers to the transformation of predictors in ways that better represent the relationships between those predictors and the outcome variable. Many of the variables acquired from the ACS in the steps above are already “pre-engineered” as they were returned as percentages from the ACS data profile, saving some steps. However, some variables would benefit from additional transformation.

The code below creates two new variables: pop_density, which represents the number of people in each Census tract per square kilometer, and median_structure_age, which represents the median age of housing structures in the tract.

library(sf)

library(units)

dfw_data_for_model <- dfw_data %>%

mutate(pop_density = as.numeric(set_units(total_populationE / st_area(.), "1/km2")),

median_structure_age = 2018 - median_year_builtE) %>%

select(!ends_with("M")) %>%

rename_with(.fn = ~str_remove(.x, "E$")) %>%

na.omit()The calculation of the pop_density column appears more complicated, so it is helpful to read it from the inside out. The st_area() function from the sf package calculates the area of the Census tract; by default this will be in square meters, using the base measurement unit of the data’s coordinate reference system. The total_population column is then divided by the area of the tract. Next, the set_units() function is used to convert the measurement to population per square kilometer using "1/km2". Finally, the calculation is converted from a units vector to a numeric vector with as.numeric(). Calculating median structure age is more straightforward, as the median_year_builtE column is subtracted from 2017, the mid-point of the 5-year ACS period from which our data are derived. Finally, to simplify the dataset, margin of error columns are dropped, the E at the end of the estimate columns is removed with rename_with(), and tracts with NA values are dropped as well with na.omit().

We can then examine our modified dataset:

| GEOID | median_value | median_rooms | total_population | median_age | median_year_built | median_income | pct_college | pct_foreign_born | pct_white | percent_ooh | geometry | pop_density | median_structure_age |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 48085030101 | 183600 | 6.0 | 2296 | 31.9 | 1994 | 63036 | 8.6 | 16.5 | 65.4 | 85.5 | MULTIPOLYGON (((787828.3 21… | 19.05013 | 24 |

| 48085030102 | 198500 | 6.0 | 2720 | 45.5 | 1995 | 65234 | 19.2 | 20.0 | 68.8 | 78.6 | MULTIPOLYGON (((784027.9 21… | 13.43726 | 23 |

| 48085030201 | 324200 | 7.4 | 3653 | 35.1 | 2002 | 85938 | 41.3 | 4.9 | 77.0 | 79.2 | MULTIPOLYGON (((774843.6 21… | 39.45911 | 16 |

| 48085030202 | 366900 | 7.3 | 3530 | 42.1 | 2001 | 134097 | 40.9 | 8.3 | 72.5 | 88.3 | MULTIPOLYGON (((764886.2 21… | 22.95549 | 17 |

| 48085030204 | 217200 | 6.2 | 6592 | 32.7 | 2006 | 98622 | 51.8 | 9.7 | 63.0 | 63.6 | MULTIPOLYGON (((778099.2 21… | 417.34182 | 12 |

8.2.4 A first regression model

After inspecting the distribution of the outcome variable and completing feature engineering with respect to the predictors, we are ready to fit a first linear model. Our linear model with a log-transformed outcome variable can be written as follows:

\[ \begin{align*}\operatorname{log(median\_value)} &= \alpha + \beta_{1}(\operatorname{median\_rooms}) + \beta_{2}(\operatorname{median\_income})\ + \\&\quad \beta_{3}(\operatorname{pct\_college}) + \beta_{4}(\operatorname{pct\_foreign\_born}) + \\&\quad\beta_{5}(\operatorname{pct\_white})\ + \beta_{6}(\operatorname{median\_age}) + \\&\quad\beta_{7}(\operatorname{median\_structure\_age}) + \beta_{8}(\operatorname{percent\_ooh})\ + \\&\quad \beta_{9}(\operatorname{pop\_density}) + \beta_{10}(\operatorname{total\_population}) + \epsilon\end{align*} \] where \(\alpha\) is the model intercept, \(\beta_{1}\) is the change in the log of median home value with a 1-unit increase in the median number of rooms (and so forth for all the model predictors) while holding all other predictors constant, and \(\epsilon\) is the error term.

Model formulas in R are generally written as outcome ~ predictor_1 + predictor_2 + ... + predictor_k, where k is the number of model predictors. The formula can be supplied as a character string to the model function (as shown below) or supplied unquoted in the call to the function. We use lm() to fit the linear model, and then check the results with summary().

formula <- "log(median_value) ~ median_rooms + median_income + pct_college + pct_foreign_born + pct_white + median_age + median_structure_age + percent_ooh + pop_density + total_population"

model1 <- lm(formula = formula, data = dfw_data_for_model)

summary(model1)##

## Call:

## lm(formula = formula, data = dfw_data_for_model)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.03015 -0.14250 0.00033 0.14794 1.45712

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 1.123e+01 6.199e-02 181.093 < 2e-16 ***

## median_rooms 8.800e-03 1.058e-02 0.832 0.405711

## median_income 5.007e-06 4.202e-07 11.915 < 2e-16 ***

## pct_college 1.325e-02 5.994e-04 22.108 < 2e-16 ***

## pct_foreign_born 2.877e-03 8.005e-04 3.594 0.000336 ***

## pct_white 3.961e-03 4.735e-04 8.365 < 2e-16 ***

## median_age 4.782e-03 1.372e-03 3.485 0.000507 ***

## median_structure_age 1.202e-05 2.585e-05 0.465 0.642113

## percent_ooh -4.761e-03 5.599e-04 -8.504 < 2e-16 ***

## pop_density -7.946e-06 6.160e-06 -1.290 0.197216

## total_population 8.960e-06 4.460e-06 2.009 0.044733 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.2695 on 1548 degrees of freedom

## Multiple R-squared: 0.7818, Adjusted R-squared: 0.7804

## F-statistic: 554.6 on 10 and 1548 DF, p-value: < 2.2e-16The printed summary gives us information about the model fit. The Estimate column represents the model parameters (the \(\beta\) values), followed by the standard error, the t-value test statistic, and the p-value which helps us assess relative statistical significance. James et al. (2013) (p. 67) provides a concise summary of how p-values should be interpreted:

Roughly speaking, we interpret the p-value as follows: a small p-value indicates that it is unlikely to observe such a substantial association between the predictor and the response (outcome variable) due to chance, in the absence of any real association between the predictor and the response. Hence, if we see a small p-value, then we can infer that there is an association between the predictor and the response. We reject the null hypothesis – that is, we declare a relationship to exist between X and Y – if the p-value is small enough.

By convention, researchers will use p-value cutoffs of 0.05, 0.01, or 0.001, depending on the topic under study; these values are highlighted with asterisks in the model summary printout. Examining the model parameters and p-values suggests that median household income, bachelor’s degree attainment, the percentage non-Hispanic white population, and median age are positively associated with median home values, whereas the percentage owner-occupied housing is negatively associated with median home values. The R-squared value is 0.78, suggesting that our model explains around 78 percent of the variance in median_value.

Somewhat surprisingly, median_rooms does not appear to have a significant relationship with median home value as per the model. On the one hand, this can be interpreted as “the effect of median rooms on median home value with all other predictors held constant” - but also could be suggestive of model mis-specification. As mentioned earlier, models using ACS data as predictors are highly vulnerable to collinearity. Collinearity occurs when two or more predictors are highly correlated with one another, which can lead to misinterpretation of the actual relationships between predictors and the outcome variable.

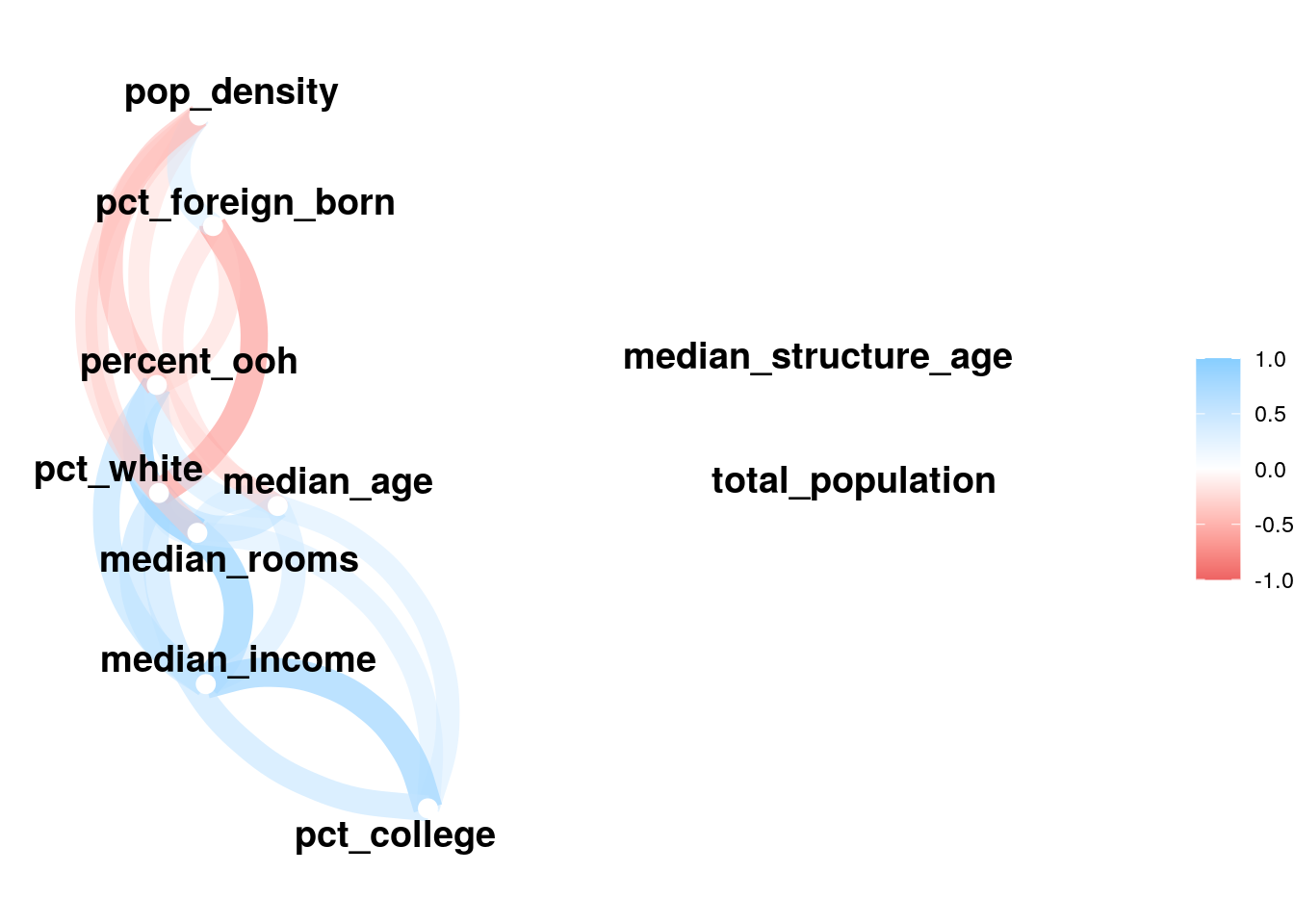

One way to inspect for collinearity is to visualize the correlation matrix of the predictors, in which correlations between all predictors are calculated with one another. The correlate() function in the corrr package (Kuhn, Jackson, and Cimentada 2020) offers a straightforward method for calculating a correlation matrix over a rectangular data frame.

library(corrr)

dfw_estimates <- dfw_data_for_model %>%

select(-GEOID, -median_value, -median_year_built) %>%

st_drop_geometry()

correlations <- correlate(dfw_estimates, method = "pearson")One calculated, the correlation matrix can be visualized with network_plot():

network_plot(correlations)

Figure 8.5: Network plot of correlations between model predictors

We notice that most of the predictors are correlated with one another to some degree, which is unsurprising given that they all represent social demographic data. Collinearity can be diagnosed further by calculating the variance inflation factor (VIF) for the model, which takes into account not just pairwise correlations but the extent to which predictors are collinear with all other predictors. A VIF value of 1 indicates no collinearity; VIF values above 5 suggest a level of collinearity that has a problematic influence on model interpretation (James et al. 2013). VIF is implemented by the vif() function in the car package (Fox and Weisberg 2019).

## median_rooms median_income pct_college

## 5.450436 6.210615 3.722434

## pct_foreign_born pct_white median_age

## 2.013411 3.233142 1.833625

## median_structure_age percent_ooh pop_density

## 1.055760 3.953587 1.537508

## total_population

## 1.174613The most problematic variable is median_income, with a VIF value of over 6. A potential solution involves removing this variable and re-running the model; as it is highly correlated with other predictors in the model, the effect of median household income would in theory be captured by the remaining predictors.

formula2 <- "log(median_value) ~ median_rooms + pct_college + pct_foreign_born + pct_white + median_age + median_structure_age + percent_ooh + pop_density + total_population"

model2 <- lm(formula = formula2, data = dfw_data_for_model)

summary(model2)##

## Call:

## lm(formula = formula2, data = dfw_data_for_model)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.91753 -0.15318 -0.00224 0.16192 1.58948

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 1.101e+01 6.203e-02 177.566 < 2e-16 ***

## median_rooms 7.326e-02 9.497e-03 7.713 2.18e-14 ***

## pct_college 1.775e-02 4.862e-04 36.506 < 2e-16 ***

## pct_foreign_born 4.170e-03 8.284e-04 5.034 5.38e-07 ***

## pct_white 4.996e-03 4.862e-04 10.274 < 2e-16 ***

## median_age 3.527e-03 1.429e-03 2.468 0.0137 *

## median_structure_age 2.831e-05 2.696e-05 1.050 0.2939

## percent_ooh -3.888e-03 5.798e-04 -6.705 2.81e-11 ***

## pop_density -5.474e-06 6.430e-06 -0.851 0.3947

## total_population 9.711e-06 4.658e-06 2.085 0.0373 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.2815 on 1549 degrees of freedom

## Multiple R-squared: 0.7618, Adjusted R-squared: 0.7604

## F-statistic: 550.4 on 9 and 1549 DF, p-value: < 2.2e-16The model R-squared drops slightly but not substantially to 0.76. Notably, the effect of median_rooms on median home value now comes through as strongly positive and statistically significant, suggesting that collinearity with median household income was suppressing this relationship in the first model. As a diagnostic, we can re-compute the VIF for the second model:

vif(model2)## median_rooms pct_college pct_foreign_born

## 4.025411 2.245227 1.976425

## pct_white median_age median_structure_age

## 3.124400 1.822825 1.052805

## percent_ooh pop_density total_population

## 3.885779 1.535763 1.174378The VIF values for all predictors in the model are now below 5.

8.2.5 Dimension reduction with principal components analysis

In the example above, dropping median household income from the model had a fairly negligible impact on the overall model fit and significantly improved the model’s problems with collinearity. However, this will not always be the best solution for analysts, especially when dropping variables has a more significant impact on the model fit. An alternative approach to resolving problems with collinearity is dimension reduction, which transforms the predictors into a series of dimensions that represent the variance in the predictors but are uncorrelated with one another. Dimension reduction is also a useful technique when an analyst is dealing with a massive number of predictors (hundreds or even thousands) and needs to reduce the predictors in the model to a more manageable number while still retaining the ability to explain the variance in the outcome variable.

One of the most popular methods for dimension reduction is principal components analysis. Principal components analysis (PCA) reduces a higher-dimensional dataset into a lower-dimensional representation based linear combinations of the variables used. The first principal component is the linear combination of variables that explains the most overall variance in the data; the second principal component explains the second-most overall variance but is also constrained to be uncorrelated with the first component; and so forth.

PCA can be computed with the prcomp() function. We will use the dfw_estimates object that we used to compute the correlation data frame here as it includes only the predictors in the regression model, and use the notation formula = ~. to compute PCA over all the predictors. By convention, scale. and center should be set to TRUE as this normalizes the variables in the dataset before computing PCA given that they are measured differently.

## Importance of components:

## PC1 PC2 PC3 PC4 PC5 PC6 PC7

## Standard deviation 2.020 1.1832 1.1307 1.0093 0.89917 0.70312 0.67686

## Proportion of Variance 0.408 0.1400 0.1278 0.1019 0.08085 0.04944 0.04581

## Cumulative Proportion 0.408 0.5481 0.6759 0.7778 0.85860 0.90803 0.95385

## PC8 PC9 PC10

## Standard deviation 0.48099 0.36127 0.31567

## Proportion of Variance 0.02314 0.01305 0.00997

## Cumulative Proportion 0.97698 0.99003 1.00000Printing the summary() of the PCA model shows 10 components that collectively explain 100% of the variance in the original predictors. The first principal component explains 40.8 percent of the overall variance; the second explains 14 percent; and so forth.

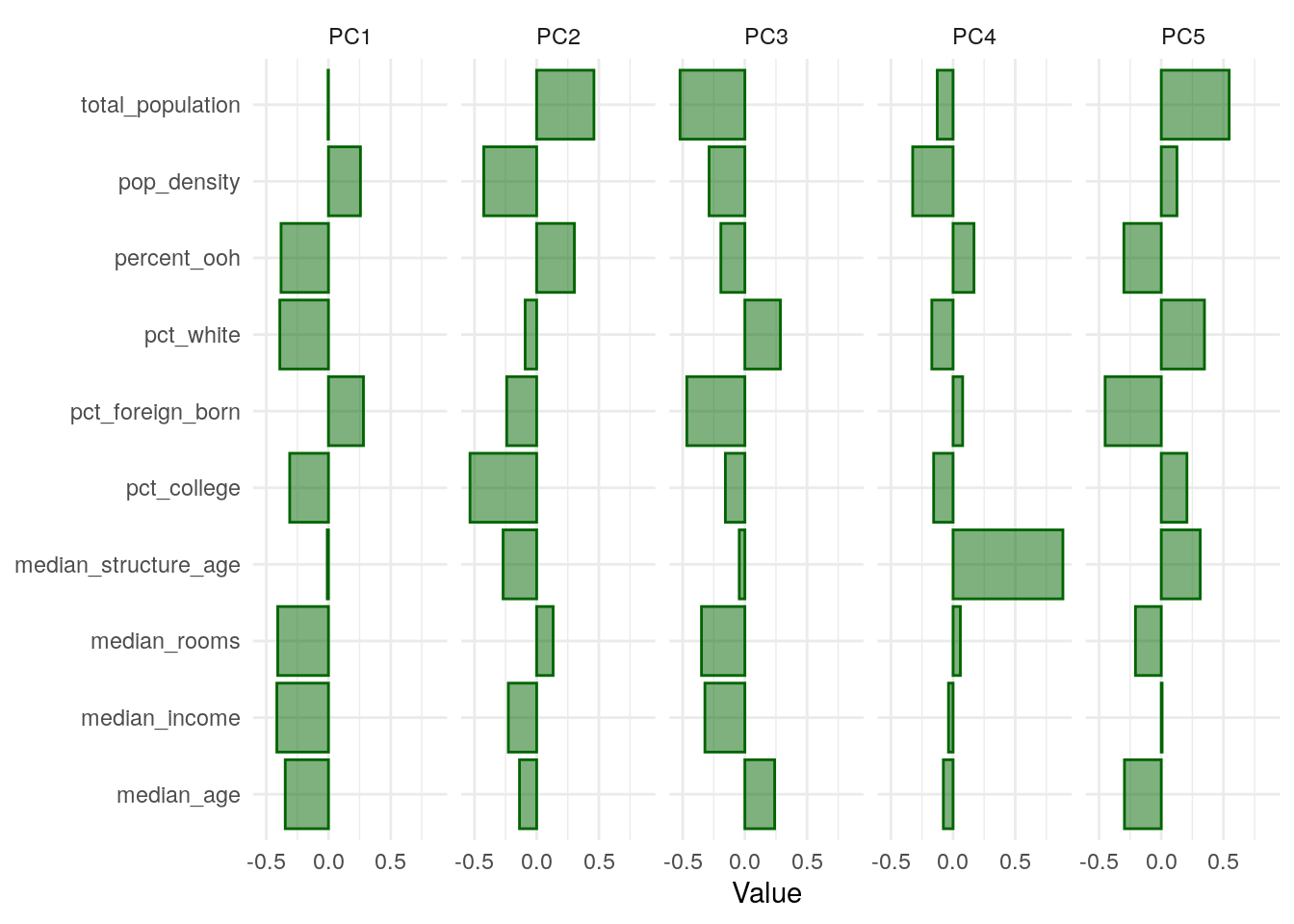

To understand what the different principal components now mean, it is helpful to plot the variable loadings. This represents the relationships between the original variables in the model and the derived components. This approach is derived from Julia Silge’s blog post on the topic (Silge 2021).

First, the variable loading matrix (stored in the rotation element of the pca object) is converted to a tibble so we can view it easier.

| predictor | PC1 | PC2 | PC3 | PC4 | PC5 | PC6 | PC7 | PC8 | PC9 | PC10 |

|---|---|---|---|---|---|---|---|---|---|---|

| median_rooms | -0.4077852 | 0.1323021 | -0.3487253 | 0.0586440 | -0.2076430 | 0.2123836 | -0.1609126 | -0.2069992 | 0.4346406 | 0.5876075 |

| total_population | -0.0032288 | 0.4604789 | -0.5213433 | -0.1272021 | 0.5458555 | -0.4347310 | 0.0936404 | -0.0506824 | -0.0371959 | -0.0173050 |

| median_age | -0.3474694 | -0.1380008 | 0.2393805 | -0.0781325 | -0.2960241 | -0.7849378 | -0.1486493 | -0.2533379 | 0.0605217 | -0.0558195 |

| median_income | -0.4149988 | -0.2277427 | -0.3213461 | -0.0367450 | 0.0067868 | 0.2005786 | 0.1163622 | -0.0635095 | 0.3147651 | -0.7171939 |

| pct_college | -0.3116212 | -0.5358812 | -0.1567328 | -0.1562603 | 0.2061776 | 0.0588802 | 0.3496196 | -0.1984163 | -0.5235463 | 0.2916531 |

| pct_foreign_born | 0.2812618 | -0.2409164 | -0.4667693 | 0.0769341 | -0.4514510 | -0.2779627 | 0.3495310 | 0.4664939 | 0.0962084 | 0.0945663 |

| pct_white | -0.3910590 | -0.0924262 | 0.2855863 | -0.1708498 | 0.3472563 | -0.0631469 | -0.0042362 | 0.7224557 | 0.2354127 | 0.1607084 |

| percent_ooh | -0.3812989 | 0.3031944 | -0.1930025 | 0.1680217 | -0.3006266 | 0.0753161 | -0.3374642 | 0.3233115 | -0.6051618 | -0.1285341 |

| pop_density | 0.2571344 | -0.4253855 | -0.2876627 | -0.3247835 | 0.1261611 | -0.0196740 | -0.7385667 | 0.0538260 | 0.0011908 | 0.0034392 |

| median_structure_age | -0.0095808 | -0.2700238 | -0.0450257 | 0.8829973 | 0.3131336 | -0.1369883 | -0.1609821 | 0.0036929 | 0.0443381 | 0.0238885 |

Positive values for a given row mean that the original variable is positively loaded onto a given component, and negative values mean that the variable is negatively loaded. Larger values in each direction are of the most interest to us; values near 0 mean the variable is not meaningfully explained by a given component. To explore this further, we can visualize the first five components with ggplot2:

pca_tibble %>%

select(predictor:PC5) %>%

pivot_longer(PC1:PC5, names_to = "component", values_to = "value") %>%

ggplot(aes(x = value, y = predictor)) +

geom_col(fill = "darkgreen", color = "darkgreen", alpha = 0.5) +

facet_wrap(~component, nrow = 1) +

labs(y = NULL, x = "Value") +

theme_minimal()

Figure 8.6: Loadings for first five principal components

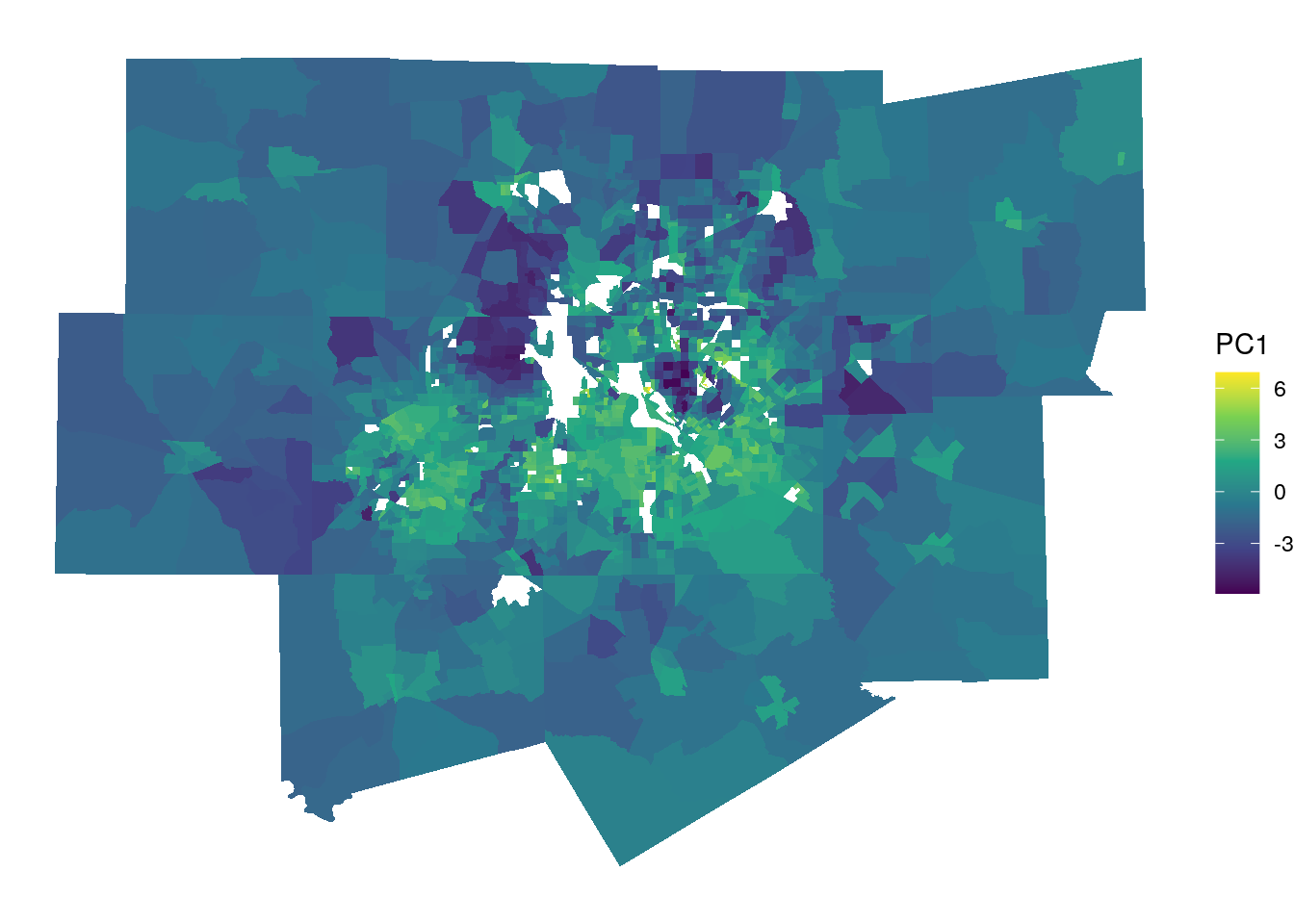

With respect to PC1, which explains nearly 41 percent of the variance in the overall predictor set, the variables percent_ooh, pct_white, pct_college, median_rooms, median_income, and median_age load negatively, whereas pop_density and pct_foreign_born load positively. We can attach these principal components to our original data with predict() and cbind(), then make a map of PC1 for further exploration:

components <- predict(pca, dfw_estimates)

dfw_pca <- dfw_data_for_model %>%

select(GEOID, median_value) %>%

cbind(components)

ggplot(dfw_pca, aes(fill = PC1)) +

geom_sf(color = NA) +

theme_void() +

scale_fill_viridis_c()

Figure 8.7: Map of principal component 1

The map, along with the bar chart, helps us understand how the multiple variables represent latent social processes at play in Dallas-Fort Worth. The brighter yellow areas, which have higher values for PC1, are located in communities like east Fort Worth, east Arlington, Grand Prairie, and south Dallas. Generally speaking, these are low-to-middle income areas with larger nonwhite populations. The locations with the lowest values for PC1 are Southlake (northeast of Fort Worth) and Highland Park (north of downtown Dallas); these communities are segregated, predominantly non-Hispanic white, and are among the wealthiest neighborhoods in the entire United States. In turn, PC1 captures the gradient that represents these social differences, with which multiple demographic characteristics will be associated.

These principal components can be used for principal components regression, in which the derived components themselves are used as model predictors. Generally, components should be chosen that account for at least 90 percent of the original variance in the predictors, though this will often be up to the discretion of the analyst. In the example below, we will fit a model using the first six principal components and the log of median home value as the outcome variable.

pca_formula <- paste0("log(median_value) ~ ",

paste0('PC', 1:6, collapse = ' + '))

pca_model <- lm(formula = pca_formula, data = dfw_pca)

summary(pca_model)##

## Call:

## lm(formula = pca_formula, data = dfw_pca)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.78888 -0.16854 -0.00726 0.16941 1.60089

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 12.301439 0.007483 1643.902 <2e-16 ***

## PC1 -0.180706 0.003706 -48.765 <2e-16 ***

## PC2 -0.247181 0.006326 -39.072 <2e-16 ***

## PC3 -0.077097 0.006621 -11.645 <2e-16 ***

## PC4 -0.084417 0.007417 -11.382 <2e-16 ***

## PC5 0.111525 0.008325 13.397 <2e-16 ***

## PC6 0.003787 0.010646 0.356 0.722

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.2955 on 1552 degrees of freedom

## Multiple R-squared: 0.737, Adjusted R-squared: 0.736

## F-statistic: 724.9 on 6 and 1552 DF, p-value: < 2.2e-16The model fit, as represented by the R-squared value, is similar to the models fit earlier in this chapter. One possible disadvantage of principal components regression, however, is the interpretation of the results as the different variables which are comprehensible on their own are now spread across the components. It can be helpful to think of the different components as indices in this sense.

As discussed above, PC1 represents a gradient from segregated, older, wealthy, white communities on the low end to more diverse, lower-income, and younger communities on the high end; this PC is negatively associated with median home values, with tracks with expectations. Reviewing the plot above, PC2 is associated with lower population densities and levels of educational attainment; in turn, it can be thought of as an urban (on the low end) to rural (on the high end) gradient. A negative association with median home value is then expected as home values are higher in the urban core than on the rural fringe of the metropolitan area.

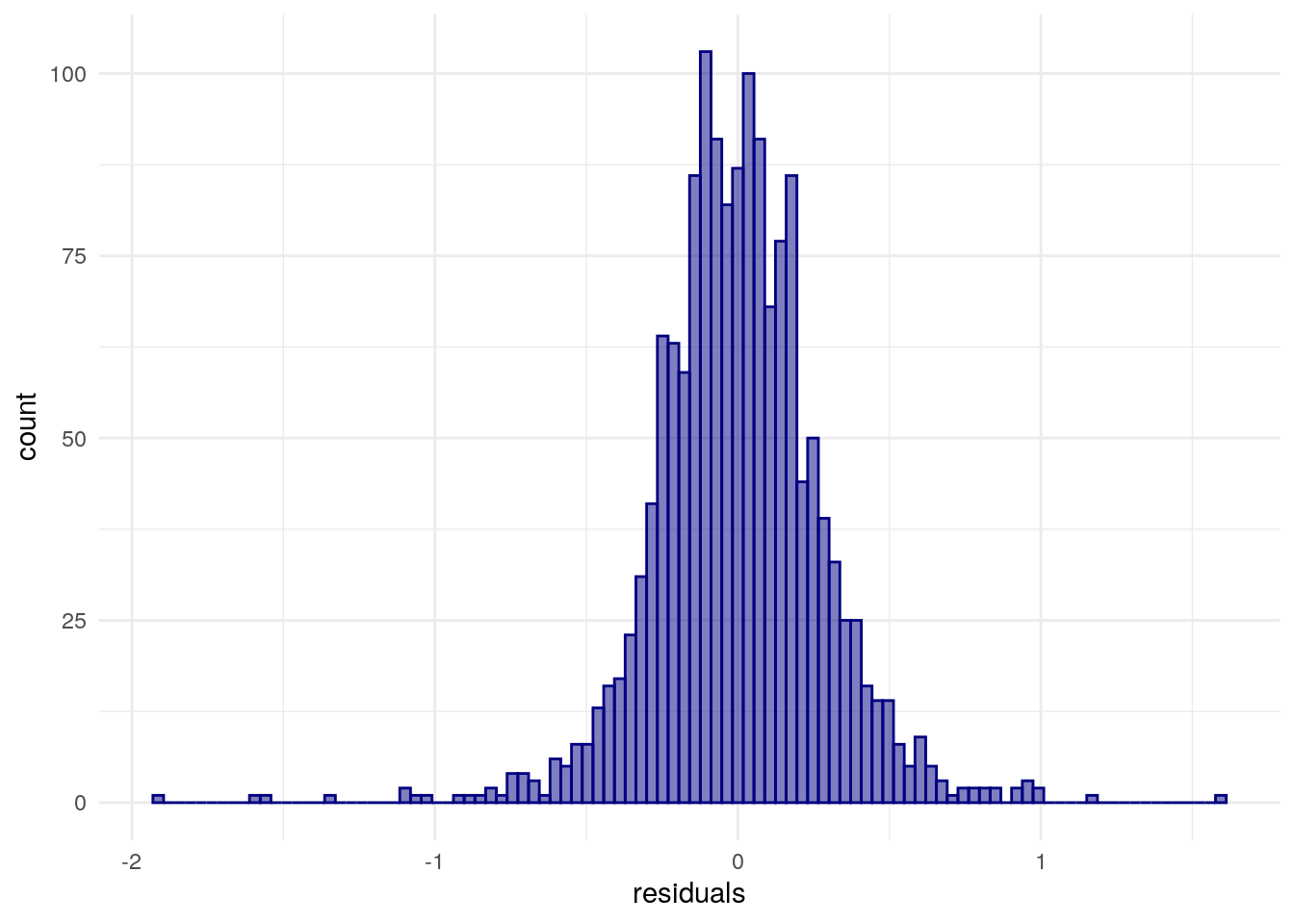

8.3 Spatial regression

A core assumption of the linear model is that the errors are independent of one another and normally distributed. Log-transforming the right-skewed outcome variable, median home values, was intended to resolve the latter; we can check this by adding the residuals for model2 to our dataset and drawing a histogram to check its distribution.

dfw_data_for_model$residuals <- residuals(model2)

ggplot(dfw_data_for_model, aes(x = residuals)) +

geom_histogram(bins = 100, alpha = 0.5, color = "navy",

fill = "navy") +

theme_minimal()

Figure 8.8: Distribution of model residuals with a ggplot2 histogram

The former assumption of independence of residuals is commonly violated in models that use spatial data, however. This is because models of spatial processes commonly are characterized by spatial autocorrelation in the error term, meaning that the model’s performance itself depends on geographic location. We can assess this using techniques learned in the previous chapter such as Moran’s \(I\).

library(spdep)

wts <- dfw_data_for_model %>%

poly2nb() %>%

nb2listw()

moran.test(dfw_data_for_model$residuals, wts)##

## Moran I test under randomisation

##

## data: dfw_data_for_model$residuals

## weights: wts

##

## Moran I statistic standard deviate = 14.023, p-value < 2.2e-16

## alternative hypothesis: greater

## sample estimates:

## Moran I statistic Expectation Variance

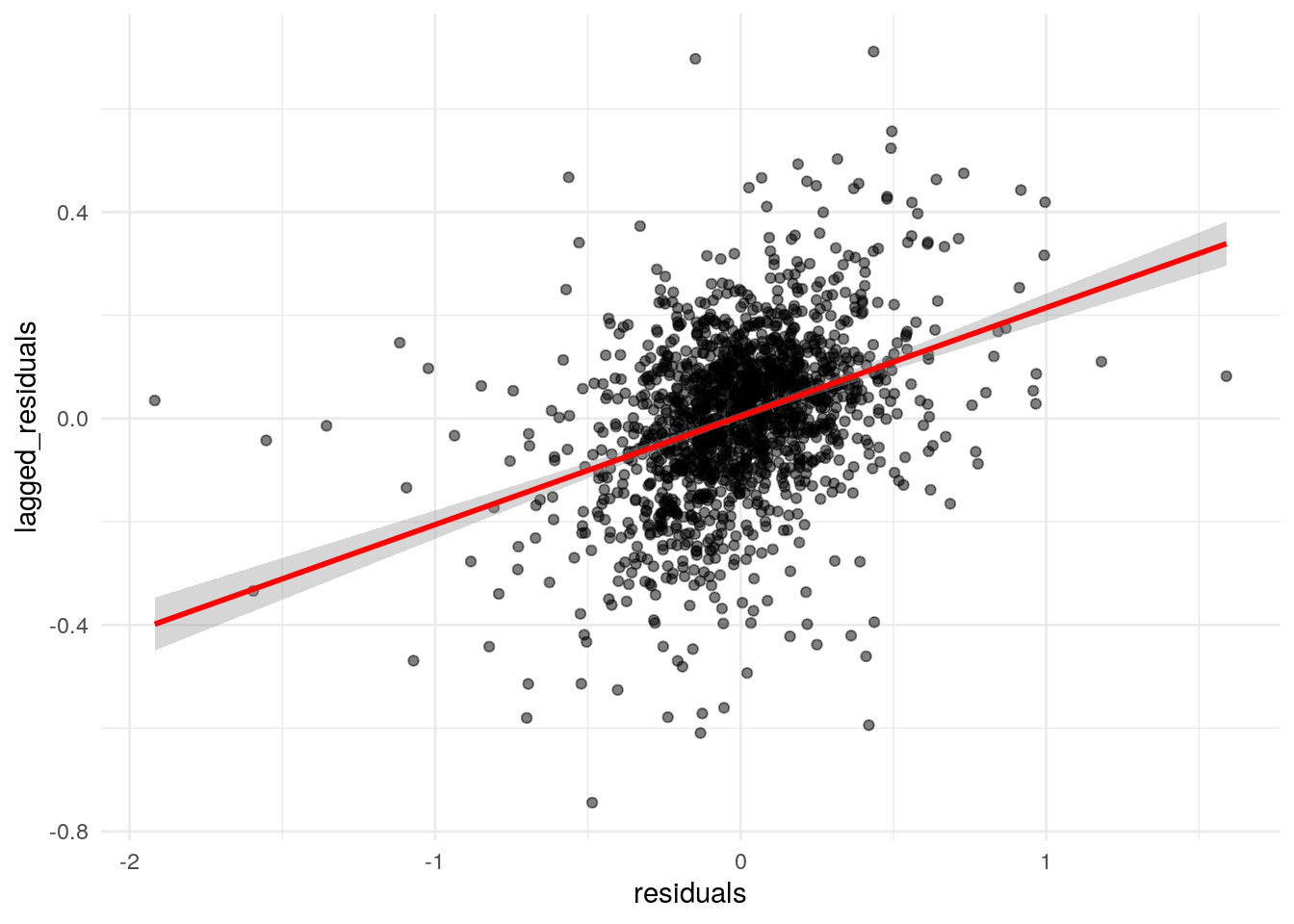

## 0.2101748515 -0.0006418485 0.0002259981The Moran’s \(I\) test statistic is modest and positive (0.21) but is statistically significant. This can be visualized with a Moran scatterplot:

dfw_data_for_model$lagged_residuals <- lag.listw(wts, dfw_data_for_model$residuals)

ggplot(dfw_data_for_model, aes(x = residuals, y = lagged_residuals)) +

theme_minimal() +

geom_point(alpha = 0.5) +

geom_smooth(method = "lm", color = "red")

Figure 8.9: Moran scatterplot of residual spatial autocorrelation

The plot illustrates the positive spatial autocorrelation in the residuals, suggesting that the assumption of independence in the model error term is violated. To resolve this issue, we can turn to spatial regression methods.

8.3.1 Methods for spatial regression

The field of spatial econometrics is broadly concerned with the estimation and specification of models that are appropriate for handling spatial dependence in statistical processes. In general, two families of models are used to address these concerns with respect to regression: spatial lag models and spatial error models.

8.3.1.1 Spatial lag models

Spatial lag models account for spatial dependence by including a spatial lag of the outcome variable in the model. In doing so, it accounts for spatial spillover effects – the possibility that values in neighboring areas have an influence on values in a given location. A spatial lag model can be written as follows (Rey, Arribas-Bel, and Wolf 2020):

\[ {Y_i} = \alpha + \rho{Y_{lag-i}} + \sum_k \beta_k X_{ki} + \epsilon_i, \]

where

\[ Y_{lag-i} = \sum\limits_{j}w_{ij}Y_j \]

with \(w_{ij}\) representing the spatial weights. In this notation, \(\rho\) is the parameter measuring the effect of the spatial lag in the outcome variable, and \(k\) is the number of predictors in the model. However, the inclusion of a spatially lagged outcome variable on the right-hand side of the equation violates the exogeneity assumption of the linear model. In turn, special methods are required for estimating the spatial lag model, which are implemented in R in the spatialreg package (R. S. Bivand, Pebesma, and Gomez-Rubio 2013). Below, we use the function lagsarlm() to estimate the relationship between logged median home value and its predictors as a spatial lag model:

library(spatialreg)

lag_model <- lagsarlm(

formula = formula2,

data = dfw_data_for_model,

listw = wts

)

summary(lag_model, Nagelkerke = TRUE)##

## Call:lagsarlm(formula = formula2, data = dfw_data_for_model, listw = wts)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.0647421 -0.1377312 -0.0032552 0.1386914 1.4820482

##

## Type: lag

## Coefficients: (asymptotic standard errors)

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 7.0184e+00 2.6898e-01 26.0927 < 2.2e-16

## median_rooms 6.2027e-02 8.8554e-03 7.0045 2.480e-12

## pct_college 1.2858e-02 5.4696e-04 23.5083 < 2.2e-16

## pct_foreign_born 2.0118e-03 7.7482e-04 2.5964 0.009420

## pct_white 2.7112e-03 4.7183e-04 5.7461 9.133e-09

## median_age 3.4421e-03 1.3163e-03 2.6150 0.008922

## median_structure_age 2.6093e-05 2.4827e-05 1.0510 0.293267

## percent_ooh -3.0428e-03 5.4316e-04 -5.6021 2.118e-08

## pop_density -1.3573e-05 5.9323e-06 -2.2879 0.022143

## total_population 8.3762e-06 4.2928e-06 1.9512 0.051031

##

## Rho: 0.35319, LR test value: 210.86, p-value: < 2.22e-16

## Asymptotic standard error: 0.023376

## z-value: 15.109, p-value: < 2.22e-16

## Wald statistic: 228.29, p-value: < 2.22e-16

##

## Log likelihood: -125.2882 for lag model

## ML residual variance (sigma squared): 0.067179, (sigma: 0.25919)

## Nagelkerke pseudo-R-squared: 0.79193

## Number of observations: 1559

## Number of parameters estimated: 12

## AIC: 274.58, (AIC for lm: 483.43)

## LM test for residual autocorrelation

## test value: 6.9225, p-value: 0.0085118The general statistical relationships observed in the non-spatial model are preserved in the spatial lag model, though the effect sizes (the model parameters) are smaller, illustrating the importance of controlling for the spatial lag. Additionally, the \(\rho\) parameter is positive and statistically significant, suggesting the presence of spatial spillover effects. This finding makes practical sense, as median home values may be influenced by the values of homes in neighboring Census tracts along with the characteristics of the neighborhood itself. The argument Nagelkerke = TRUE computes a pseudo-R-squared value, which is slightly higher than the corresponding value for the non-spatial model.

8.3.1.2 Spatial error models

In contrast with spatial lag models, spatial error models include a spatial lag in model’s error term. This is designed to capture latent spatial processes that are not currently being accounted for in the model estimation and in turn show up in the model’s residuals. The spatial error model can be written as follows:

\[ Y_i = \alpha + \sum\limits_k\beta_kX_{ki} + u_i, \]

where

\[ u_i = \lambda u_{lag-i} + \epsilon_i \]

and

\[ u_{lag-i} = \sum\limits_jw_{ij}u_j \]

Like the spatial lag model, estimating the spatial error model requires special methods, implemented with the errorsarlm() function in spatialreg.

error_model <- errorsarlm(

formula = formula2,

data = dfw_data_for_model,

listw = wts

)

summary(error_model, Nagelkerke = TRUE)##

## Call:errorsarlm(formula = formula2, data = dfw_data_for_model, listw = wts)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.97990245 -0.13702534 -0.00030105 0.13933507 1.54937871

##

## Type: error

## Coefficients: (asymptotic standard errors)

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 1.1098e+01 6.6705e-02 166.3753 < 2.2e-16

## median_rooms 8.2815e-02 9.7089e-03 8.5298 < 2.2e-16

## pct_college 1.5857e-02 5.7427e-04 27.6120 < 2.2e-16

## pct_foreign_born 3.6601e-03 9.6570e-04 3.7901 0.0001506

## pct_white 4.6754e-03 6.1175e-04 7.6426 2.132e-14

## median_age 3.9346e-03 1.4130e-03 2.7845 0.0053605

## median_structure_age 2.6093e-05 2.5448e-05 1.0254 0.3051925

## percent_ooh -4.7538e-03 5.6726e-04 -8.3803 < 2.2e-16

## pop_density -1.4999e-05 6.8731e-06 -2.1823 0.0290853

## total_population 1.0497e-05 4.4668e-06 2.3499 0.0187796

##

## Lambda: 0.46765, LR test value: 164.17, p-value: < 2.22e-16

## Asymptotic standard error: 0.031997

## z-value: 14.615, p-value: < 2.22e-16

## Wald statistic: 213.61, p-value: < 2.22e-16

##

## Log likelihood: -148.6309 for error model

## ML residual variance (sigma squared): 0.067878, (sigma: 0.26053)

## Nagelkerke pseudo-R-squared: 0.7856

## Number of observations: 1559

## Number of parameters estimated: 12

## AIC: 321.26, (AIC for lm: 483.43)The \(\lambda\) (lambda) value is large and statistically significant, again illustrating the importance of accounting for spatial autocorrelation in the model.

8.3.2 Choosing between spatial lag and spatial error models

The spatial lag and spatial error models offer alternative approaches to accounting for processes of spatial autocorrelation when fitting models. This raises the question: which one of the two models should the analyst choose? On the one hand, this should be thought through in the context of the topic under study. For example, if spatial spillover effects are related to the hypotheses being evaluated by the analysts (e.g. the effect of neighboring home values on focal home values), a spatial lag model may be preferable; alternatively, if there are spatially autocorrelated factors that likely influence the outcome variable but are difficult to measure quantitatively (e.g. discrimination and racial bias in the housing market), a spatial error model might be preferred.

The two types of models can also be evaluated with respect to some quantitative metrics. For example, we can re-compute Moran’s \(I\) over the model residuals to see if the spatial model has resolved our problems with spatial dependence. First, we’ll check the spatial lag model:

moran.test(lag_model$residuals, wts)##

## Moran I test under randomisation

##

## data: lag_model$residuals

## weights: wts

##

## Moran I statistic standard deviate = 2.0436, p-value = 0.0205

## alternative hypothesis: greater

## sample estimates:

## Moran I statistic Expectation Variance

## 0.0300648348 -0.0006418485 0.0002257748Next, the spatial error model:

moran.test(error_model$residuals, wts)##

## Moran I test under randomisation

##

## data: error_model$residuals

## weights: wts

##

## Moran I statistic standard deviate = -1.6126, p-value = 0.9466

## alternative hypothesis: greater

## sample estimates:

## Moran I statistic Expectation Variance

## -0.0248732656 -0.0006418485 0.0002257849Both models reduce Moran’s \(I\); however, the error model does a better job of eliminating spatial autocorrelation in the residuals entirely. We can also use Lagrange multiplier tests to evaluate the appropriateness of these models together (Anselin et al. 1996). These tests check for spatial error dependence, whether a spatially lagged dependent variable is missing, and the robustness of each in the presence of the other.

The lm.LMtests() function can be used with an input linear model to compute these tests. We’ll use model2, the home value model with median household income omitted, to compute the tests.

lm.LMtests(

model2,

wts,

test = c("LMerr", "LMlag", "RLMerr", "RLMlag")

)##

## Lagrange multiplier diagnostics for spatial dependence

##

## data:

## model: lm(formula = formula2, data = dfw_data_for_model)

## weights: wts

##

## LMerr = 194.16, df = 1, p-value < 2.2e-16

##

##

## Lagrange multiplier diagnostics for spatial dependence

##

## data:

## model: lm(formula = formula2, data = dfw_data_for_model)

## weights: wts

##

## LMlag = 223.37, df = 1, p-value < 2.2e-16

##

##

## Lagrange multiplier diagnostics for spatial dependence

##

## data:

## model: lm(formula = formula2, data = dfw_data_for_model)

## weights: wts

##

## RLMerr = 33.063, df = 1, p-value = 8.921e-09

##

##

## Lagrange multiplier diagnostics for spatial dependence

##

## data:

## model: lm(formula = formula2, data = dfw_data_for_model)

## weights: wts

##

## RLMlag = 62.276, df = 1, p-value = 2.998e-15All test statistics are large and statistically significant; in this case, the robust versions of the statistics should be compared. While both the lag and error models would be appropriate for this data, the test statistic for the robust version of the lag model is larger, suggesting that the spatial lag model should be preferred over the spatial error model in this example.

8.4 Geographically weighted regression

The models addressed in the previous sections – both the regular linear model and its spatial adaptations – estimate global relationships between the outcome variable, median home values, and its predictors. This lends itself to conclusions like “In the Dallas-Fort Worth metropolitan area, higher levels of educational attainment are associated with higher median home values.” However, metropolitan regions like Dallas-Fort Worth are diverse and multifaceted. It is possible that a relationship between a predictor and the outcome variable that is observed for the entire region on average may vary significantly from neighborhood to neighborhood. This type of phenomenon is called spatial non-stationarity, and can be explored with geographically weighted regression, or GWR (Brunsdon, Fotheringham, and Charlton 1996).

GWR is a technique designed to evaluate local variations in the results of regression models given a kernel (distance-decay) weighting function. Following Lu et al. (2014), the basic form of GWR for a given location \(i\) can be written as:

\[ Y_i = \alpha_i + \sum\limits_{k=1}^m\beta_{ik}X_{ik} + \epsilon_i \]

where the model intercept, parameters, and error term are all location-specific. Notably, \(\beta_{ik}\) represents a local regression coefficient for predictor \(k\) (of the total number of predictors \(m\)) that is specific to location \(i\).

GWR is implemented in the GWmodel R package (Gollini et al. 2015) as well as the spgwr package (R. Bivand and Yu 2020). These packages offer an interface to a wider family of geographically weighted methods, such as binomial, generalized linear, and robust geographically weighted regression; geographically weighted PCA; and geographically weighted summary statistics. The example below will adapt the regression model used in earlier examples to a locally-variation model with GWR.

8.4.1 Choosing a bandwidth for GWR

GWR relies on the concept of a “kernel bandwidth” to compute the local regression model for each location. A kernel bandwidth is based on the kernel type (fixed or adaptive) and a distance-decay function. A fixed kernel uses a cutoff distance to determine which observations will be included in the local model for a given location \(i\), whereas an adaptive kernel uses the nearest neighbors to a given location. In most circumstances with Census tract data where the size of tracts in a region will vary widely, an adaptive kernel will be preferred to a fixed kernel to ensure consistency of neighborhoods across the region. The distance-decay function then governs how observations will be weighted in the local model relative to their distance from location \(i\). Closer tracts to \(i\) will have a greater influence on the results for location \(i\), with influence falling with distance.

Bandwidth sizes (either a distance cutoff or number of nearest neighbors) can be selected directly by the user; in the GWmodel R package, the bw.gwr() function also helps analysts choose an appropriate kernel bandwidth using cross-validation. The code below computes a bandwidth bw using this method. Note that we must first convert our data to a legacy SpatialPolygonsDataFrame object from the sp package as GWmodel does not yet support sf objects.

library(GWmodel)

library(sf)

dfw_data_sp <- dfw_data_for_model %>%

as_Spatial()

bw <- bw.gwr(

formula = formula2,

data = dfw_data_sp,

kernel = "bisquare",

adaptive = TRUE

)bw.gwr() chose 187 as the number of nearest neighbors based on cross-validation. This means that for each Census tract, the nearest 187 of the total 1559 Census tracts in the Dallas-Fort Worth region will be used to estimate the local model, with weights calculated using the bisquare distance-decay function as follows:

\[ w_{ij} = 1-(\frac{d_{ij}^2}{h^2})^2 \]

where \(d_{ij}\) is the distance between local observation \(i\) and neighbor \(j\), and \(h\) is the kernel bandwidth. As we are using an adaptive kernel, \(h\) will vary for each observation and take on the distance between location \(i\) and its “neighbor” furthest from that location.

8.4.2 Fitting and evaluating the GWR model

The basic form of GWR can be fit with the gwr.basic() function, which uses a similar argument structure to other models fit in this chapter. The formula can be passed to the formula parameter as a character string; we’ll use the original formula with median household income omitted and include it below for a refresher. The derived bandwidth from bw.gwr() will be used with a bisquare, adaptive kernel.

formula2 <- "log(median_value) ~ median_rooms + pct_college + pct_foreign_born + pct_white + median_age + median_structure_age + percent_ooh + pop_density + total_population"

gw_model <- gwr.basic(

formula = formula2,

data = dfw_data_sp,

bw = bw,

kernel = "bisquare",

adaptive = TRUE

)Printing the object gw_model will show both the results of the global model and the ranges of the locally varying parameter estimates. The model object itself has the following elements:

names(gw_model)## [1] "GW.arguments" "GW.diagnostic" "lm" "SDF"

## [5] "timings" "this.call" "Ftests"Each element provides information about the model fit, but perhaps most interesting to the analyst is the SDF element, in this case a SpatialPolygonsDataFrame containing mappable model results. We will extract that element from the model object and convert it to simple features, then take a look at the columns in the object.

## [1] "Intercept" "median_rooms"

## [3] "pct_college" "pct_foreign_born"

## [5] "pct_white" "median_age"

## [7] "median_structure_age" "percent_ooh"

## [9] "pop_density" "total_population"

## [11] "y" "yhat"

## [13] "residual" "CV_Score"

## [15] "Stud_residual" "Intercept_SE"

## [17] "median_rooms_SE" "pct_college_SE"

## [19] "pct_foreign_born_SE" "pct_white_SE"

## [21] "median_age_SE" "median_structure_age_SE"

## [23] "percent_ooh_SE" "pop_density_SE"

## [25] "total_population_SE" "Intercept_TV"

## [27] "median_rooms_TV" "pct_college_TV"

## [29] "pct_foreign_born_TV" "pct_white_TV"

## [31] "median_age_TV" "median_structure_age_TV"

## [33] "percent_ooh_TV" "pop_density_TV"

## [35] "total_population_TV" "Local_R2"

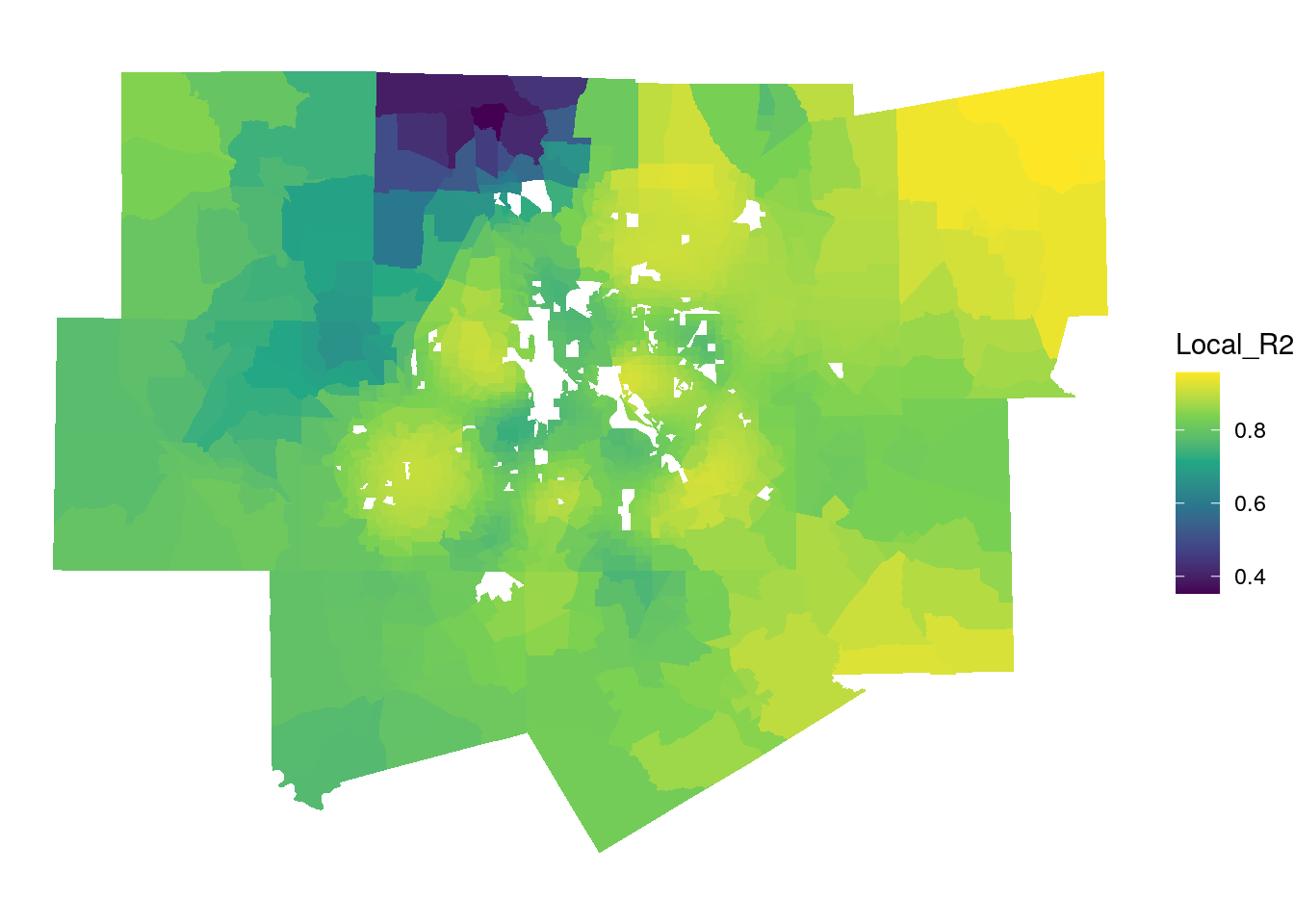

## [37] "geometry"The sf object includes columns with local parameter estimates, standard errors, and t-values for each predictor, along with local diagnostic elements such as the Local R-squared, giving information about how well a model performs in any particular location. Below, we use ggplot2 and geom_sf() to map the local R-squared, though it may be useful as well to use mapview::mapview(gw_model_results, zcol = "Local_R2" and explore these results interactively.

ggplot(gw_model_results, aes(fill = Local_R2)) +

geom_sf(color = NA) +

scale_fill_viridis_c() +

theme_void()

Figure 8.10: Local R-squared values from the GWR model

The map suggests that the model performs very well in Fort Worth, Collin County, and the eastern edge of the metropolitan area, with local R-squared values exceeding 0.9. It performs worse in northwestern Denton County and in other more rural areas to the west of Fort Worth.

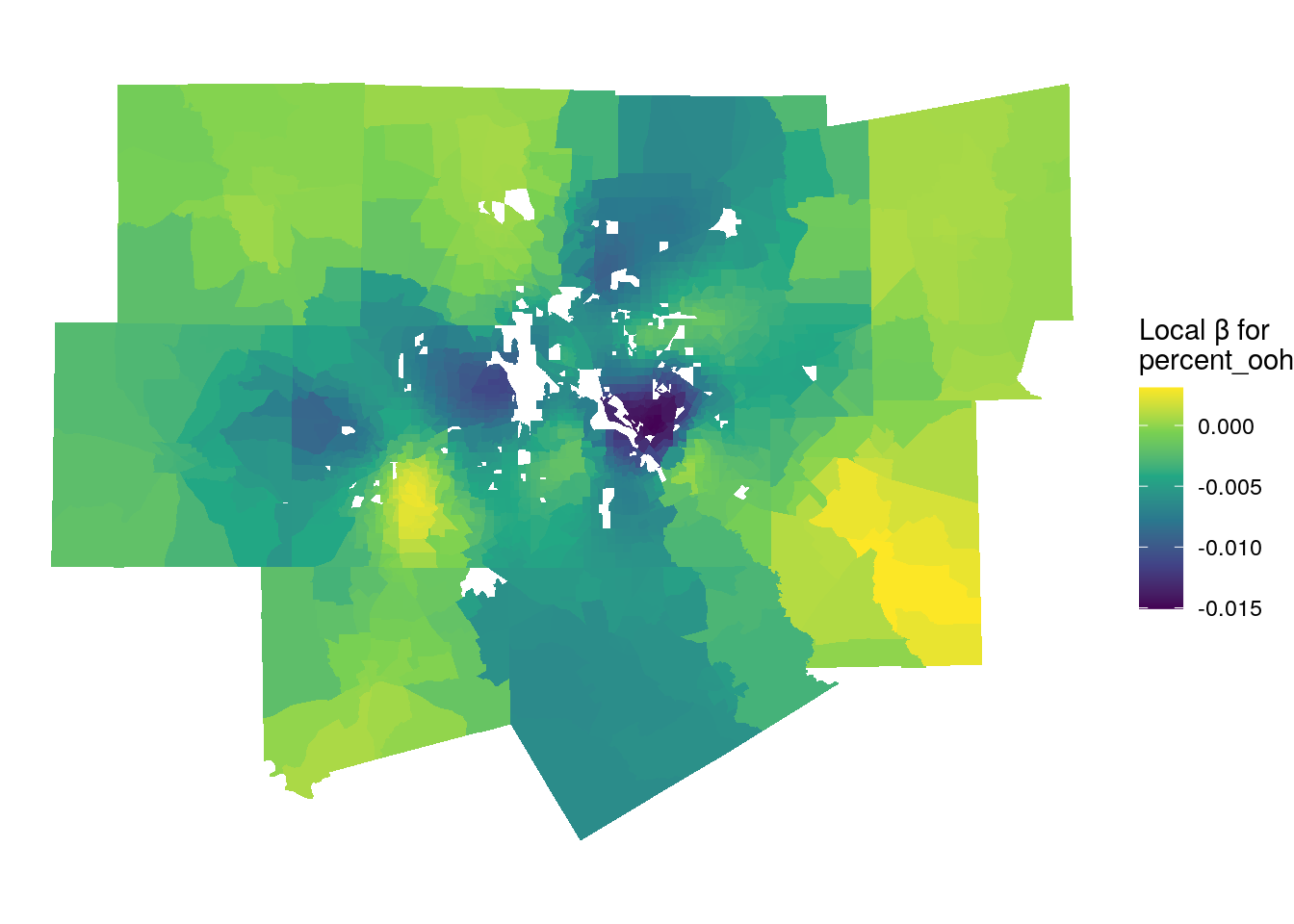

We can examine locally varying parameter estimates in much the same way. The first map below visualizes the local relationships between the percentage owner-occupied housing and median home values. Recall from the global model that this coefficient was negative and statistically significant.

ggplot(gw_model_results, aes(fill = percent_ooh)) +

geom_sf(color = NA) +

scale_fill_viridis_c() +

theme_void() +

labs(fill = "Local β for \npercent_ooh")

Figure 8.11: Local parameter estimates for percent owner-occupied housing

The dark purple areas on the map are those areas where the global relationship in the model reflects the local relationship, as local parameter estimates are negative. The areas that stick out include the high-density area of uptown Dallas, where renter-occupied housing is common and median home values are very high. However, in rural areas on the fringe of the metropolitan area this relationship reverses, returning in some cases positive parameter estimates (the yellow parts of the map). This means that for those local areas, a greater percentage of owner-occupied housing is associated with higher home values.

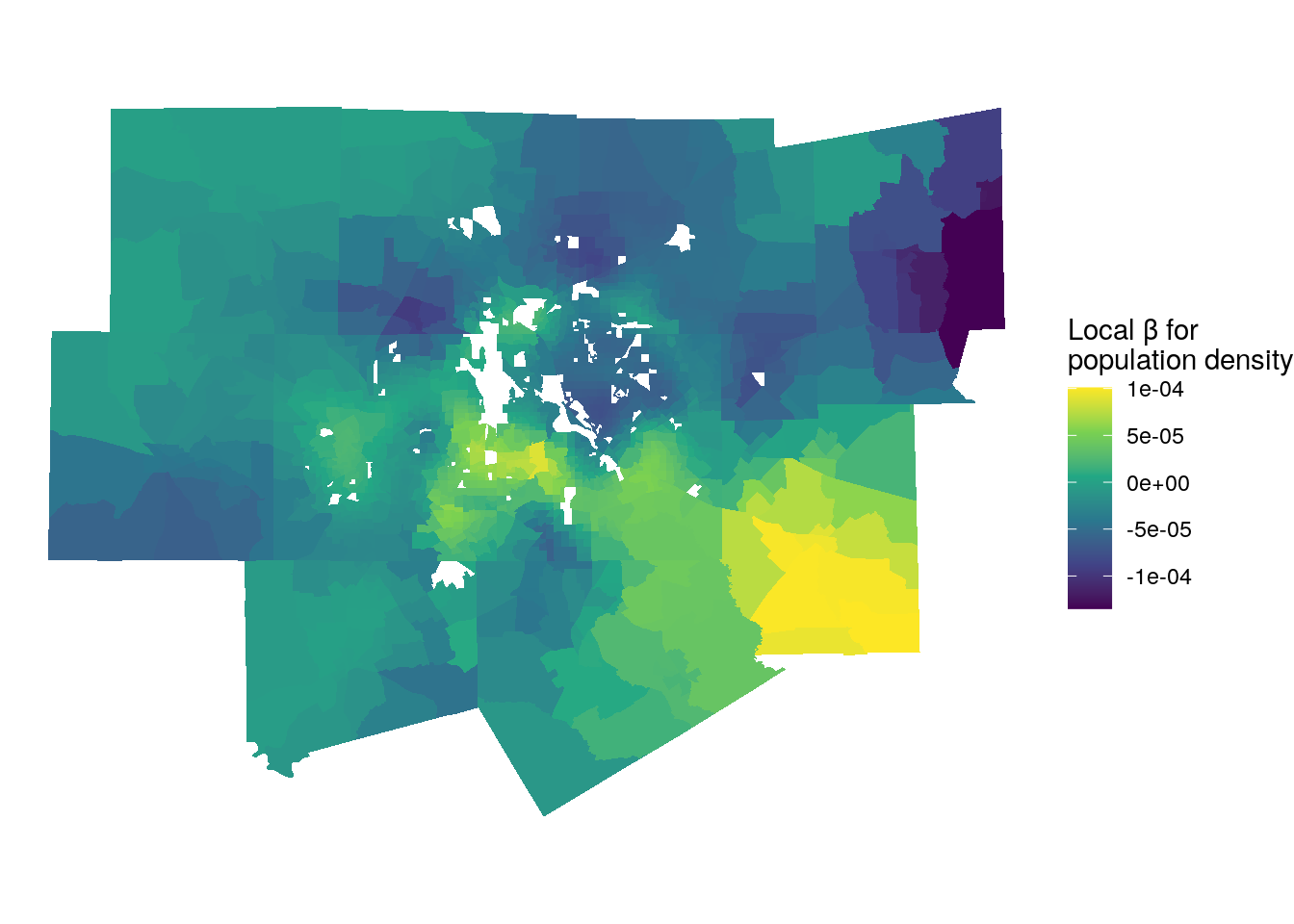

We can explore this further by investigating the local parameter estimates for population density, which was not significant in the global model:

ggplot(gw_model_results, aes(fill = pop_density)) +

geom_sf(color = NA) +

scale_fill_viridis_c() +

theme_void() +

labs(fill = "Local β for \npopulation density")

Figure 8.12: Local parameter estimates for population density

For large portions of the metropolitan area, the relationship between population density and median home values is negligible, which is what drives the global relationship. However, we do observe variations in different parts of the metropolitan area. Bright yellow locations are those where high population densities are associated with higher home values. Conversely, the darker blue and purple areas represent several affluent enclaves or suburbs of Dallas and Fort Worth where lower densities are associated with higher home values.

8.4.3 Limitations of GWR

While GWR is an excellent method for exploring spatial non-stationarity in regression model results, it does have some limitations. When the spgwr package itself is loaded into your R environment, it prints the following warning:

NOTE: This package does not constitute approval of GWR as a method of spatial analysis.

Why would an R package itself warn the user about its use? GWR is particularly susceptible to problems that plague other regression models using spatial data. Earlier sections in this chapter covered the topic of collinearity, where parameter estimates are biased due to high correlations between predictors. Given that predictor values tend to cluster spatially, GWR models often suffer from local multicollinearity where predictors are highly correlated in local areas.

Additionally, the impact of edge effects can be acute in GWR models. Edge effects - which are present in most spatial models and analysis techniques - refer to misleading results for observations on the edge of a dataset. In the example used in this chapter, the Dallas-Fort Worth metropolitan area represents the region under study. This artificially restricts the neighborhoods around Census tracts on the edge of the metropolitan area to only those tracts that are also within the metropolitan area, and omits the rural tracts that border them. For local models, this is a particular problem as results for observations on the edge of an area are based on incomplete information that may not reflect the true circumstances of that location.

With these concerns in mind, GWR is generally recommended as an exploratory technique that serves as a useful companion to the estimation of a global model. For example, a global parameter estimate may suggest that in Dallas-Fort Worth, median home values tend to be higher in areas with lower percentages of owner-occupied housing, controlling for other predictors in the model. GWR, used as a follow-up, helps the analyst understand that this global relationship is driven by higher home values near to urban cores such as in the uptown Dallas area, and may not necessarily characterize the dynamics of rural and exurban areas elsewhere in the metropolitan region.

8.5 Classification and clustering of ACS data

The statistical models discussed earlier in this chapter were fit for the purpose of understanding relationships between an outcome variable and a series of predictors. In the social sciences, such models are generally used for inference, where a researcher tests those hypotheses with respect to those relationships to understand social processes. In industry, regression models are commonly used instead for prediction, where a model is trained on the relationship between an observed outcome and predictors then used to make predictions on out-of-sample data. In machine learning terminology, this is referred to as supervised learning, where the prediction target is known.

In other cases, the researcher is interested in discovering the structure of a dataset and generating meaningful labels for it rather than making predictions based on a known outcome. This type of approach is termed unsupervised machine learning. This section will explore two common applications of unsupervised machine learning with respect to demographic data: geodemographic classification, which identifies “clusters” of similar areas based on common demographic characteristics, and regionalization, which partitions an area into salient regions that are both spatially contiguous and share common demographic attributes.

8.5.1 Geodemographic classification

Geodemographic classification refers to the grouping of geographic observations based on similar demographic (or other) characteristics (Singleton and Spielman 2013). It is commonly used to generate neighborhood “typologies” that can help explain general similarities and differences among neighborhoods in a broader region. While the geodemographic approach has been criticized for essentializing neighborhoods (Goss 1995), it is also widely used to understand dynamics of urban systems (Vicino, Hanlon, and Short 2011) and has been proposed as a possible solution to problems with large margins of error for individual variables in the ACS (Spielman and Singleton 2015). In industry, geodemographics are widely used for marketing and customer segmentation purposes. Popular frameworks include Esri’s Tapestry Segmentation and Experian’s Mosaic product.

While the exact methodology to produce a geodemographic classification system varies from implementation to implementation, the general process used involves dimension reduction applied to a high-dimensional input dataset of model features, followed by a clustering algorithm to partition observations into groups based on the derived dimensions. As we have already employed principal components analysis for dimension reduction on the Dallas-Fort Worth dataset, we can re-use those components for this purpose.

The k-means clustering algorithm is one of the most common unsupervised algorithms used to partition data in this way. K-means works by attempting to generate \(K\) clusters that are internally similar but dissimilar from other clusters. Following James et al. (2013) and Boehmke and Greenwell (2019), the goal of K-means clustering can be written as:

\[ \underset{C_1...C_k}{\text{minimize}}\left \{ \sum\limits_{k=1}^KW(C_k) \right \} \]

where the within-cluster variation \(W(C_k)\) is computed as

\[ W(C_k) = \sum_{x_i \in C_k}(x_{i} - \mu_k) ^ 2 \]

with \(x_i\) representing an observation in the cluster \(C_k\) and \(\mu_k\) representing the mean value of all observations in cluster \(C_k\).

To compute k-means, the analyst must first choose the number of desired clusters, represented with \(k\). The analyst then specifies \(k\) initial “centers” from the data (this is generally done at random) to seed the algorithm. The algorithm then iteratively assigns observations to clusters until the total within-cluster variation is minimized, returning a cluster solution.

In R, k-means can be computed with the kmeans() function. This example solution will generate 6 cluster groups. Given that the algorithm relies on random seeding of the cluster centers, set.seed() should be used to ensure stability of the solution.

set.seed(1983)

dfw_kmeans <- dfw_pca %>%

st_drop_geometry() %>%

select(PC1:PC8) %>%

kmeans(centers = 6)

table(dfw_kmeans$cluster)##

## 1 2 3 4 5 6

## 456 193 172 83 228 427The algorithm has partitioned the data into six clusters; the smallest (Cluster 4) has 83 Census tracts, whereas the largest (Cluster 3) has 456 Census tracts. At this stage, it is useful to explore the data in both geographic space and variable space to understand how the clusters differ from one another. We can assign the cluster ID to the original dataset as a new column and map it with geom_sf().

dfw_clusters <- dfw_pca %>%

mutate(cluster = as.character(dfw_kmeans$cluster))

ggplot(dfw_clusters, aes(fill = cluster)) +

geom_sf(size = 0.1) +

scale_fill_brewer(palette = "Set1") +

theme_void() +

labs(fill = "Cluster ")

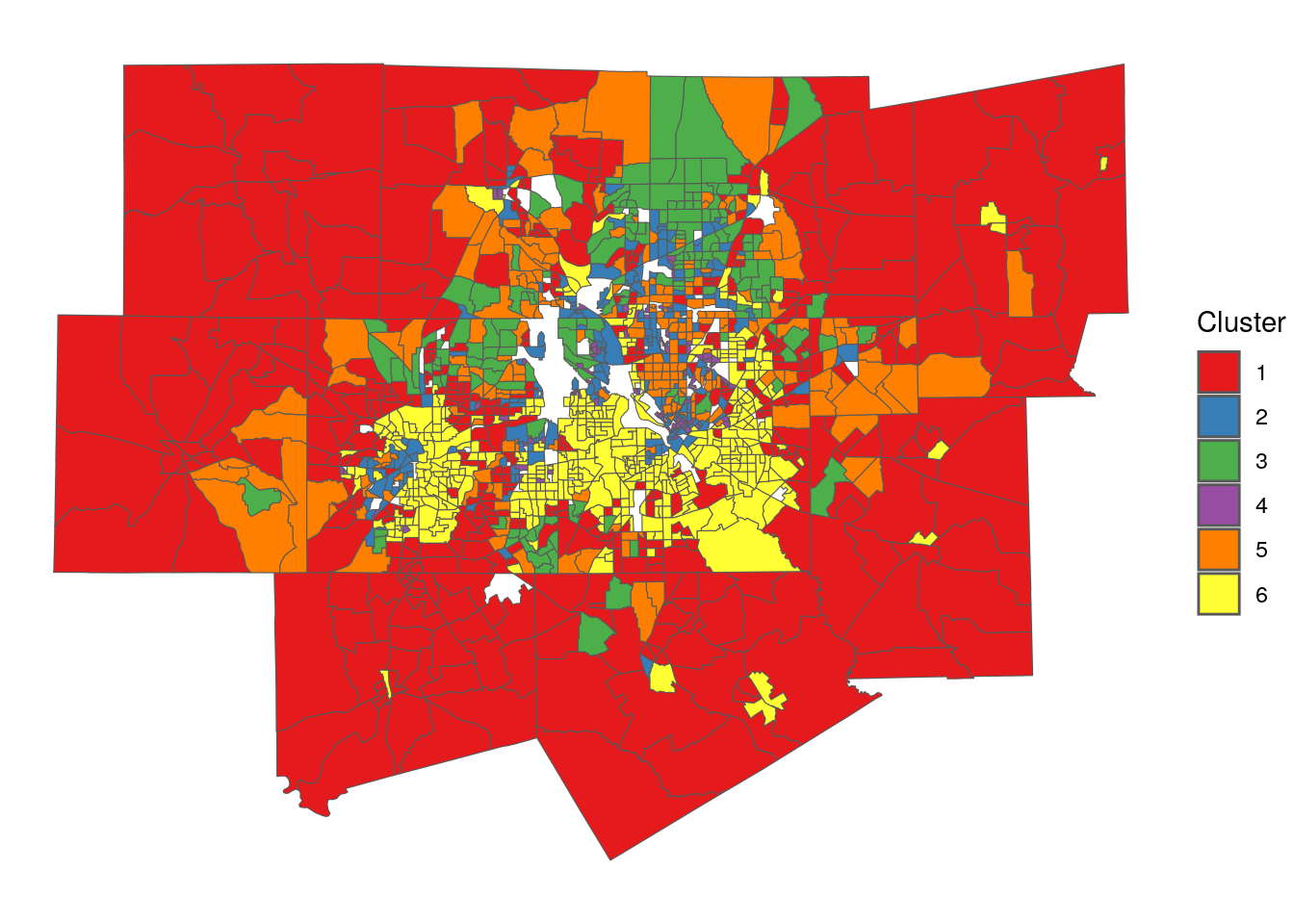

Figure 8.13: Map of geodemographic clusters in Dallas-Fort Worth

Some notable geographic patterns in the clusters are evident from the map, even if the viewer does not have local knowledge of the Dallas-Fort Worth region. Cluster 1 represents more rural communities on the edges of the metropolitan area, whereas Cluster 6 tends to be located in the core counties of Tarrant and Dallas as well as higher-density tracts in outer counties. Cluster 2 covers both big-city downtowns of Fort Worth and Dallas along with a scattering of suburban tracts.

A useful companion visualization to the map is a color-coded scatterplot using two of the principal components in the PCA dataset. We will use the two components discussed as “indices” in Section 8.2.5: PC1, which is a gradient from affluent/older/white to lower-income/younger/nonwhite, and PC2, which represents areas with high population densities and educational attainment on the low end to lower-density, less educated areas on the high end. Given the data density, ggplotly() from the plotly package will convert the scatterplot to a graphic with an interactive legend, allowing the analyst to turn cluster groups on and off.

library(plotly)

cluster_plot <- ggplot(dfw_clusters,

aes(x = PC1, y = PC2, color = cluster)) +

geom_point() +

scale_color_brewer(palette = "Set1") +

theme_minimal()

ggplotly(cluster_plot) %>%

layout(legend = list(orientation = "h", y = -0.15,

x = 0.2, title = "Cluster"))Figure 8.14: Interactive scatterplot of PC1 and PC3 colored by cluster

While all clusters overlap one another to some degree, each occupies distinct feature space. Double-click any cluster in the legend to isolate it. Cluster 1, which covers much of the rural fringe of Dallas-Fort Worth, scores very high on the “rurality” index PC2 (low density / educational attainment) and modestly negative on the “diversity” index PC1. Cluster 2, which includes the two downtowns, scores lower on the “rurality” index PC2 but scores higher on the “diversity” index PC1. A geodemographic analyst may adopt these visualization approaches to explore the proposed typology in greater depth, and aim to produce informative “labels” for each cluster.

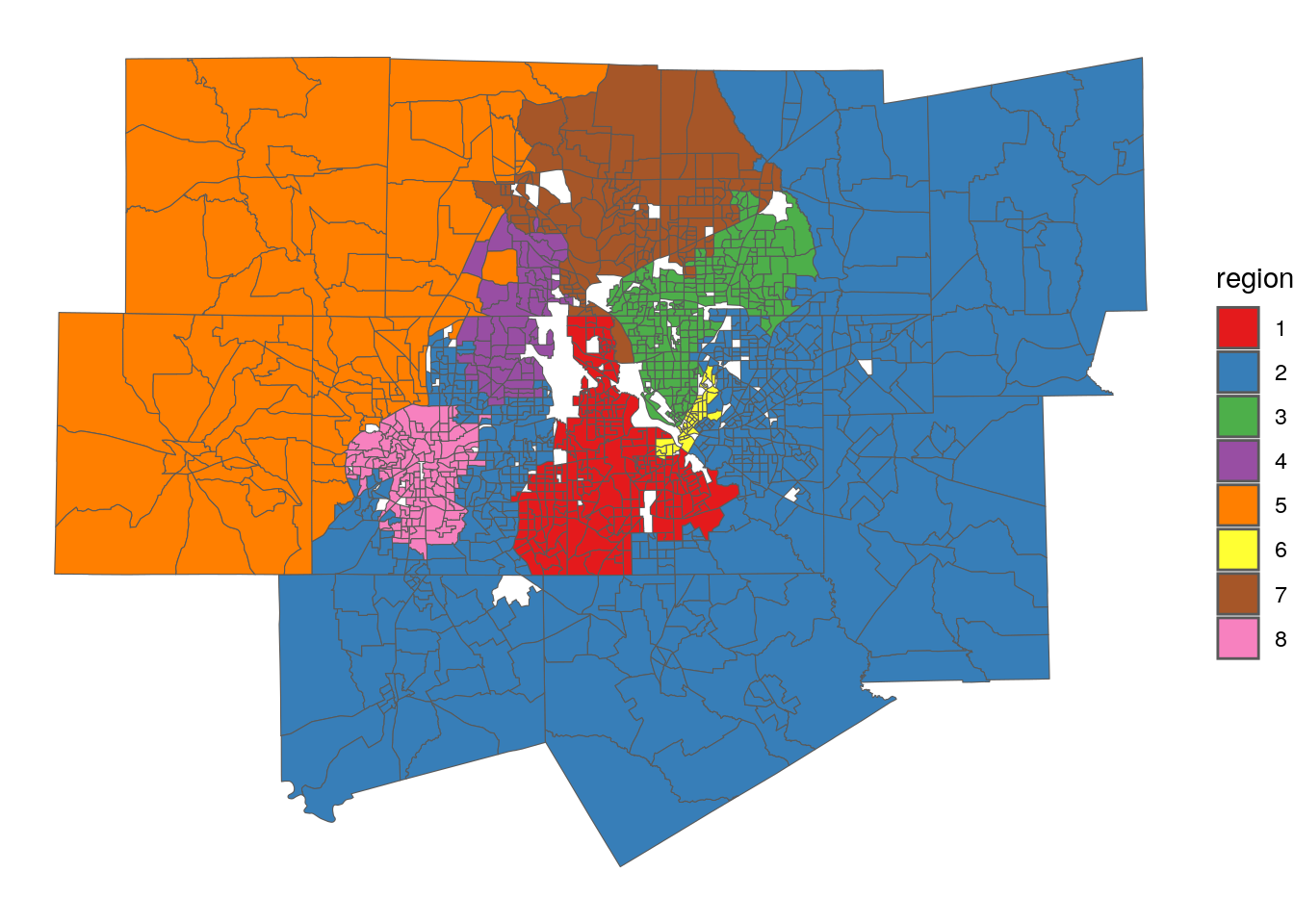

8.5.2 Spatial clustering & regionalization